Build a file sharing app with built-in payments via Polar

Create a WeTransfer-style app with subscriptions and object storage

Building a file sharing service requires handling file uploads, storage, download links, authentication, and monetization. In this tutorial, we'll build a WeTransfer-style app where anyone can upload files up to 100MB for free, and users can sign up and subscribe for larger files and longer retention.

We'll use BetterAuth for authentication, Polar for payment processing and subscriptions, and Encore's object storage for file management. You'll learn how to implement tiered features based on authentication and subscription status.

What we're building

A WeTransfer-style file sharing service with a freemium SaaS model:

Free Tier (no signup required):

- Upload files up to 100MB

- Files stored for 7 days

- Instant shareable download links

Premium Tier ($9.99/month via Polar):

- Upload files up to 5GB

- Files stored for 30 days

- Same fast, secure infrastructure

Architecture

We'll build:

- Auth service with BetterAuth for user authentication

- Files service with object storage bucket for file uploads/downloads

- Payments service with Polar integration for subscriptions

- Frontend service with static HTML/JS interface

- PostgreSQL databases for users, subscriptions, and file metadata

- Webhook handler to sync subscription changes from Polar

- Tier-based access control enforcing upload size limits and retention

What is Polar?

Polar is a merchant of record platform designed for developers. It handles:

- Subscription Management - Recurring billing with automatic renewals

- Tax Compliance - Worldwide tax calculation and remittance

- Customer Portal - Self-service subscription management

- Webhooks - Real-time subscription events

- Developer-First - Clean API and excellent docs

Polar handles payments, invoicing, and tax compliance so you can focus on your product.

Getting started

Tip: run encore app create --example=ts/polar-file-sharing to start with a complete working example. This tutorial walks through building it from scratch so you understand each component.

First, install Encore if you haven't already:

# macOS

brew install encoredev/tap/encore

# Linux

curl -L https://encore.dev/install.sh | bash

# Windows

iwr https://encore.dev/install.ps1 | iex

Create a new Encore application. This will prompt you to create a free Encore account if you don't have one (required for secret management):

encore app create file-sharing-app --example=ts/hello-world

cd file-sharing-app

Setting up Polar

Creating your Polar sandbox account

For development and testing, start with Polar's sandbox environment:

- Go to sandbox.polar.sh and sign up for a free sandbox account

- Create an organization (your company/product name)

- Go to Settings → General and scroll down to the Developer section

- Click New Token and copy your access token

The sandbox lets you test the entire payment flow without processing real payments. It is a separate environment from production, so when you're ready to go live, set up your organization at polar.sh and create a production access token there.

At the time of writing, Polar charges 4% + 40¢ per transaction with no monthly fees, which is competitive with other merchant of record services.
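To see what that fee means per subscriber, here is the arithmetic as a small TypeScript helper. It is a sketch that assumes the 4% + 40¢ rate is charged once per transaction and rounded to the nearest cent; Polar's exact rounding and any extra fees (for example for international cards or payouts) are not modeled, so check Polar's pricing page for current figures. estimatePolarFeeCents and estimateNetCents are hypothetical names.

```typescript
// Hypothetical helper: estimates Polar's fee on a single charge, in cents.
// Assumes a flat 4% + 40¢ per transaction, rounded to the nearest cent.
export function estimatePolarFeeCents(chargeCents: number): number {
  if (!Number.isInteger(chargeCents) || chargeCents < 0) {
    throw new RangeError("chargeCents must be a non-negative integer");
  }
  return Math.round(chargeCents * 0.04) + 40;
}

// Estimated amount you keep from one charge after the fee.
export function estimateNetCents(chargeCents: number): number {
  return chargeCents - estimatePolarFeeCents(chargeCents);
}
```

For the $9.99 monthly price used in the product example later (999 cents), this estimates an 80¢ fee and about $9.19 kept per charge.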

Installing the Polar SDK

Install the Polar SDK:

npm install @polar-sh/sdk

Building the file service

Creating the files service

We'll start with the core feature: file uploads and downloads. Every Encore service starts with a service definition:

// files/encore.service.ts

import { Service } from "encore.dev/service";

export default new Service("files");

Setting up object storage

File sharing services need to store user uploads. With Encore, you can create object storage by simply defining a bucket in your code. The framework automatically provisions the infrastructure locally using a storage emulator:

// files/bucket.ts

import { Bucket } from "encore.dev/storage/objects";

export const uploads = new Bucket("uploads", {

public: false, // Files are private and require our API to download

});

Because the bucket is private, its files have no public URLs; they can only be retrieved through the download endpoint we build below.

Storing file metadata in PostgreSQL

While the file bytes go in object storage, we need to track metadata: who uploaded each file, when it expires, file size, and tier. Create a database to store this information:

// files/db.ts

import { SQLDatabase } from "encore.dev/storage/sqldb";

export const db = new SQLDatabase("files", {

migrations: "./migrations",

});

This creates a PostgreSQL database for file metadata. Now define the schema with a migration file:

-- files/migrations/1_create_files.up.sql

CREATE TABLE files (

id TEXT PRIMARY KEY,

filename TEXT NOT NULL,

size_bytes BIGINT NOT NULL,

content_type TEXT NOT NULL,

uploaded_by TEXT NOT NULL, -- Auth user ID, or a generated anon_ ID for anonymous uploads

storage_key TEXT NOT NULL, -- Path in the object storage bucket

expires_at TIMESTAMP NOT NULL, -- When the file should be deleted

created_at TIMESTAMP NOT NULL DEFAULT CURRENT_TIMESTAMP

);

-- Index for querying a user's files

CREATE INDEX idx_files_uploaded_by ON files(uploaded_by);

-- Index for finding expired files to clean up

CREATE INDEX idx_files_expires_at ON files(expires_at);

The schema is straightforward: each file gets a unique ID, stores metadata about the file itself (name, size, type), tracks who uploaded it, points to where the file lives in object storage, and records when it should expire based on the user's tier.

Building the file upload endpoint

Now for the core feature: allowing users to upload files. This endpoint implements a freemium SaaS model where:

- Anonymous users can upload files up to 100MB without signing up

- Authenticated free users get the same 100MB limit

- Authenticated premium users can upload files up to 5GB

The endpoint needs to:

- Accept binary file data (not JSON)

- Optionally validate authentication (not required for free uploads)

- Check subscription tier for authenticated users

- Enforce the tier's size limit before storing the file

- Store the file in object storage

- Save metadata to the database

- Calculate expiration date based on tier
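The tier rules in this list boil down to a small pure function, which is easy to unit-test separately from the HTTP handling. A sketch mirroring the constants used in the upload endpoint (100MB and 7 days for free, 5GB and 30 days for premium); resolveTierPolicy, computeExpiry, and TierPolicy are illustrative names, not part of Encore or Polar:

```typescript
// Illustrative policy helper mirroring the tutorial's tier limits.
type Tier = "free" | "premium";

interface TierPolicy {
  tier: Tier;
  maxBytes: number;
  retentionDays: number;
}

const MB = 1024 * 1024;

// Anonymous users and signed-in users without an active subscription share the free tier.
function resolveTierPolicy(hasActiveSubscription: boolean): TierPolicy {
  return hasActiveSubscription
    ? { tier: "premium", maxBytes: 5 * 1024 * MB, retentionDays: 30 }
    : { tier: "free", maxBytes: 100 * MB, retentionDays: 7 };
}

// Expiration is the upload time plus the tier's retention window (fixed 24-hour days).
function computeExpiry(uploadedAt: Date, retentionDays: number): Date {
  return new Date(uploadedAt.getTime() + retentionDays * 24 * 60 * 60 * 1000);
}
```

One design difference: this sketch adds fixed 24-hour days, while the endpoint uses setDate, which counts calendar days in local time; the two can differ by an hour across a daylight saving change.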

Since we're handling binary data (the actual file bytes), we'll use Encore's raw endpoint feature for direct access to the HTTP request and response objects:

// files/upload.ts

import { api, APIError } from "encore.dev/api";

import { uploads } from "./bucket";

import { db } from "./db";

import { checkSubscriptionByUserId } from "../payments/subscriptions";

import { db as authDb } from "../auth/db";

import { session, user } from "../auth/schema";

import { eq } from "drizzle-orm";

import log from "encore.dev/log";

const MAX_FREE_SIZE = 100 * 1024 * 1024; // 100MB

const MAX_PREMIUM_SIZE = 5 * 1024 * 1024 * 1024; // 5GB

const FREE_RETENTION_DAYS = 7;

const PREMIUM_RETENTION_DAYS = 30;

interface UploadResponse {

fileId: string;

downloadUrl: string;

expiresAt: Date;

tier: "free" | "premium";

}

// Helper function to validate auth token (returns null if no auth)

async function validateToken(

authHeader: string | undefined

): Promise<{ userId: string; email: string } | null> {

if (!authHeader || !authHeader.startsWith("Bearer ")) {

return null; // No auth provided - allow anonymous uploads

}

const token = authHeader.replace("Bearer ", "");

// Query session

const sessionRows = await authDb

.select({

userId: session.userId,

expiresAt: session.expiresAt,

})

.from(session)

.where(eq(session.token, token))

.limit(1);

const sessionRow = sessionRows[0];

if (!sessionRow) {

throw APIError.unauthenticated("invalid session");

}

if (new Date(sessionRow.expiresAt) < new Date()) {

throw APIError.unauthenticated("session expired");

}

// Get user

const userRows = await authDb

.select({ id: user.id, email: user.email })

.from(user)

.where(eq(user.id, sessionRow.userId))

.limit(1);

const userRow = userRows[0];

if (!userRow) {

throw APIError.unauthenticated("user not found");

}

return { userId: userRow.id, email: userRow.email };

}

export const upload = api.raw(

{

expose: true,

path: "/upload",

method: "POST",

bodyLimit: 6 * 1024 * 1024 * 1024, // 6GB to accommodate premium tier

},

async (req, res) => {

try {

// Validate authentication (optional - anonymous uploads allowed)

const authHeader = Array.isArray(req.headers.authorization)

? req.headers.authorization[0]

: req.headers.authorization;

const auth = await validateToken(authHeader);

const userId = auth?.userId || `anon_${Date.now()}_${Math.random().toString(36).slice(2, 11)}`;

const userEmail = auth?.email || "anonymous";

log.info("File upload started", {

userId,

email: userEmail,

isAuthenticated: !!auth

});

// Check subscription status for authenticated users

let isPremium = false;

if (auth) {

const subscription = await checkSubscriptionByUserId(auth.userId);

isPremium = subscription.hasActiveSubscription;

}

// Read file from request

const chunks: Buffer[] = [];

for await (const chunk of req) {

chunks.push(chunk);

}

const fileBuffer = Buffer.concat(chunks);

const fileSize = fileBuffer.length;

// Check size limits

const maxSize = isPremium ? MAX_PREMIUM_SIZE : MAX_FREE_SIZE;

const tier: "free" | "premium" = isPremium ? "premium" : "free";

if (fileSize > maxSize) {

res.writeHead(413, { "Content-Type": "application/json" });

res.end(

JSON.stringify({

error: "file_too_large",

maxSize: maxSize,

tier: tier,

upgradeUrl: "https://your-polar-org.polar.sh",

})

);

return;

}

// Generate file ID and storage key

const fileId = `file_${Date.now()}_${Math.random().toString(36).slice(2, 11)}`;

const filename =

(Array.isArray(req.headers["x-filename"])

? req.headers["x-filename"][0]

: req.headers["x-filename"]) || "upload";

const contentType =

(Array.isArray(req.headers["content-type"])

? req.headers["content-type"][0]

: req.headers["content-type"]) || "application/octet-stream";

const storageKey = `${userId}/${fileId}`;

// Upload to bucket

await uploads.upload(storageKey, fileBuffer, {

contentType,

});

// Calculate expiration

const retentionDays = isPremium ? PREMIUM_RETENTION_DAYS : FREE_RETENTION_DAYS;

const expiresAt = new Date();

expiresAt.setDate(expiresAt.getDate() + retentionDays);

// Save metadata

await db.exec`

INSERT INTO files (id, filename, size_bytes, content_type, uploaded_by, storage_key, expires_at)

VALUES (${fileId}, ${filename}, ${fileSize}, ${contentType}, ${userId}, ${storageKey}, ${expiresAt})

`;

log.info("File uploaded successfully", {

fileId,

userId,

fileSize,

tier

});

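// Return download URL. ENCORE_API_URL falls back to localhost when unset; in deployed

// environments, consider appMeta().apiBaseUrl from "encore.dev" instead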

const baseUrl = process.env.ENCORE_API_URL || "http://localhost:4000";

const response: UploadResponse = {

fileId,

downloadUrl: `${baseUrl}/download/${fileId}`,

expiresAt,

tier,

};

res.writeHead(200, { "Content-Type": "application/json" });

res.end(JSON.stringify(response));

} catch (error) {

log.error("Upload failed", { error });

if (error instanceof APIError) {

res.writeHead(error.httpStatus, { "Content-Type": "application/json" });

res.end(JSON.stringify({ error: error.message }));

} else {

res.writeHead(500, { "Content-Type": "application/json" });

res.end(JSON.stringify({ error: "internal server error" }));

}

}

}

);

This implementation allows anyone to upload files up to 100MB without authentication. Authenticated premium users get increased limits. This is the freemium SaaS model: start using immediately, upgrade for more features.
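One caveat: the endpoint buffers the entire request body in memory before checking its size, so a 5GB premium upload needs roughly 5GB of RAM, and a client can send far more than 100MB (up to bodyLimit) before being rejected. A sketch of an alternative, assuming you first reject on the declared Content-Length header and then count bytes while reading; exceedsDeclaredLength, readWithLimit, and FileTooLargeError are hypothetical names:

```typescript
// Hypothetical error type so the endpoint can map it to its existing 413 response.
export class FileTooLargeError extends Error {
  readonly maxBytes: number;
  constructor(maxBytes: number) {
    super(`file exceeds ${maxBytes} bytes`);
    this.name = "FileTooLargeError";
    this.maxBytes = maxBytes;
  }
}

// Cheap pre-check: reject when the client declares a body larger than the limit.
// A missing or malformed header returns false and falls through to the streaming check.
export function exceedsDeclaredLength(
  contentLength: string | undefined,
  maxBytes: number,
): boolean {
  if (!contentLength || !/^\d+$/.test(contentLength)) return false;
  return Number(contentLength) > maxBytes;
}

// Reads a stream but throws as soon as more than maxBytes have arrived,
// so oversized uploads fail early instead of after buffering everything.
export async function readWithLimit(
  stream: AsyncIterable<Buffer>,
  maxBytes: number,
): Promise<Buffer> {
  const chunks: Buffer[] = [];
  let total = 0;
  for await (const chunk of stream) {
    total += chunk.length;
    if (total > maxBytes) {
      throw new FileTooLargeError(maxBytes);
    }
    chunks.push(chunk);
  }
  return Buffer.concat(chunks, total);
}
```

In the endpoint you would call readWithLimit(req, maxSize) in place of the chunk loop and turn FileTooLargeError into the 413 response. Keeping memory flat for multi-gigabyte files would additionally require streaming into the bucket instead of building a Buffer, which this sketch does not attempt.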

Building the file download endpoint

Downloading files should be straightforward: given a file ID, retrieve the file from object storage and serve it to the user. However, we need to enforce a few rules:

- Verify the file exists in our database

- Check that the file hasn't expired

- Fetch the file from object storage

- Serve it with the correct content type and filename

Downloads are available to anyone with the link (like WeTransfer), but expired files are automatically inaccessible. This is another raw endpoint since we're serving binary data:

// files/download.ts

import { api, APIError } from "encore.dev/api";

import { uploads } from "./bucket";

import { db } from "./db";

export const download = api.raw(

{ expose: true, path: "/download/:fileId", method: "GET" },

async (req, res) => {

// Extract fileId from URL

const url = new URL(req.url!, `http://${req.headers.host}`);

const fileId = url.pathname.split("/")[2];

// Get file metadata

const file = await db.queryRow<{

filename: string;

content_type: string;

storage_key: string;

expires_at: Date;

size_bytes: number;

}>`

SELECT filename, content_type, storage_key, expires_at, size_bytes

FROM files

WHERE id = ${fileId}

`;

if (!file) {

res.writeHead(404, { "Content-Type": "application/json" });

res.end(JSON.stringify({ error: "file not found" }));

return;

}

// Check expiration

if (new Date() > file.expires_at) {

res.writeHead(410, { "Content-Type": "application/json" });

res.end(JSON.stringify({ error: "file expired" }));

return;

}

// Download from bucket

const fileData = await uploads.download(file.storage_key);

// Serve file

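res.writeHead(200, {

"Content-Type": file.content_type,

// Percent-encoded filename* keeps quotes and non-ASCII names from breaking the header

"Content-Disposition": `attachment; filename*=UTF-8''${encodeURIComponent(file.filename)}`,

// Send the byte count of the data we actually downloaded

"Content-Length": fileData.length,

});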

res.end(fileData);

}

);
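Since anyone with the link can download a file, the file ID in the URL is effectively its access credential. The upload endpoint builds IDs from Date.now() and Math.random(), which is not a cryptographically secure source, so IDs may be easier to guess than they look. A sketch that uses Node's crypto module instead; generateFileId is a hypothetical helper, and the file_ prefix only matches the existing format:

```typescript
import { randomBytes } from "node:crypto";

// Hypothetical replacement for the Date.now()/Math.random() file IDs.
// 16 random bytes (128 bits) encoded as URL-safe base64 give 22 characters.
export function generateFileId(): string {
  return `file_${randomBytes(16).toString("base64url")}`;
}
```

base64url output never contains "/", so the ID stays a single path segment, which the download endpoint relies on when it splits the URL path.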

Setting up authentication with BetterAuth

Before we add payments, let's implement user authentication. This allows users to create accounts, sign in, and manage their subscriptions. We'll use BetterAuth, a modern TypeScript authentication framework.

Why authentication? While anonymous users can upload files (100MB limit), authenticated users can:

- Upgrade to premium for larger files (up to 5GB)

- Link their Polar subscription to their account

- Seamlessly manage billing through Polar

Installing BetterAuth and Drizzle

BetterAuth works with Drizzle ORM for type-safe database queries. Install the required dependencies:

npm install better-auth drizzle-orm pg

npm install -D drizzle-kit @types/pg

We're using Drizzle ORM because it integrates seamlessly with Encore's PostgreSQL databases while providing excellent TypeScript support.

Creating the auth service

Create a new service for authentication:

// auth/encore.service.ts

import { Service } from "encore.dev/service";

export default new Service("auth");

Defining the auth database schema

BetterAuth needs tables for users, sessions, accounts, and verification tokens. First, define the Drizzle schema:

// auth/schema.ts

import { pgTable, text, timestamp, boolean } from "drizzle-orm/pg-core";

export const user = pgTable("user", {

id: text("id").primaryKey(),

name: text("name").notNull(),

email: text("email").notNull().unique(),

emailVerified: boolean("emailVerified").notNull().default(false),

image: text("image"),

createdAt: timestamp("createdAt").notNull().defaultNow(),

updatedAt: timestamp("updatedAt").notNull().defaultNow(),

});

export const session = pgTable("session", {

id: text("id").primaryKey(),

expiresAt: timestamp("expiresAt").notNull(),

token: text("token").notNull().unique(),

createdAt: timestamp("createdAt").notNull().defaultNow(),

updatedAt: timestamp("updatedAt").notNull().defaultNow(),

ipAddress: text("ipAddress"),

userAgent: text("userAgent"),

userId: text("userId")

.notNull()

.references(() => user.id, { onDelete: "cascade" }),

});

export const account = pgTable("account", {

id: text("id").primaryKey(),

accountId: text("accountId").notNull(),

providerId: text("providerId").notNull(),

userId: text("userId")

.notNull()

.references(() => user.id, { onDelete: "cascade" }),

accessToken: text("accessToken"),

refreshToken: text("refreshToken"),

idToken: text("idToken"),

accessTokenExpiresAt: timestamp("accessTokenExpiresAt"),

refreshTokenExpiresAt: timestamp("refreshTokenExpiresAt"),

scope: text("scope"),

password: text("password"),

createdAt: timestamp("createdAt").notNull().defaultNow(),

updatedAt: timestamp("updatedAt").notNull().defaultNow(),

});

export const verification = pgTable("verification", {

id: text("id").primaryKey(),

identifier: text("identifier").notNull(),

value: text("value").notNull(),

expiresAt: timestamp("expiresAt").notNull(),

createdAt: timestamp("createdAt"),

updatedAt: timestamp("updatedAt"),

});

Now create the database instance and Drizzle connection:

// auth/db.ts

import { SQLDatabase } from "encore.dev/storage/sqldb";

import { drizzle } from "drizzle-orm/node-postgres";

import { Pool } from "pg";

import * as schema from "./schema";

export const DB = new SQLDatabase("auth", {

migrations: "./migrations",

});

// Create Drizzle instance

const pool = new Pool({

connectionString: DB.connectionString,

});

export const db = drizzle(pool, { schema });

Create the SQL migration file:

-- auth/migrations/1_create_auth_tables.up.sql

CREATE TABLE IF NOT EXISTS "user" (

"id" TEXT PRIMARY KEY NOT NULL,

"name" TEXT NOT NULL,

"email" TEXT NOT NULL UNIQUE,

"emailVerified" BOOLEAN NOT NULL DEFAULT false,

"image" TEXT,

"createdAt" TIMESTAMP NOT NULL DEFAULT CURRENT_TIMESTAMP,

"updatedAt" TIMESTAMP NOT NULL DEFAULT CURRENT_TIMESTAMP

);

CREATE TABLE IF NOT EXISTS "session" (

"id" TEXT PRIMARY KEY NOT NULL,

"expiresAt" TIMESTAMP NOT NULL,

"token" TEXT NOT NULL UNIQUE,

"createdAt" TIMESTAMP NOT NULL DEFAULT CURRENT_TIMESTAMP,

"updatedAt" TIMESTAMP NOT NULL DEFAULT CURRENT_TIMESTAMP,

"ipAddress" TEXT,

"userAgent" TEXT,

"userId" TEXT NOT NULL REFERENCES "user"("id") ON DELETE CASCADE

);

CREATE TABLE IF NOT EXISTS "account" (

"id" TEXT PRIMARY KEY NOT NULL,

"accountId" TEXT NOT NULL,

"providerId" TEXT NOT NULL,

"userId" TEXT NOT NULL REFERENCES "user"("id") ON DELETE CASCADE,

"accessToken" TEXT,

"refreshToken" TEXT,

"idToken" TEXT,

"accessTokenExpiresAt" TIMESTAMP,

"refreshTokenExpiresAt" TIMESTAMP,

"scope" TEXT,

"password" TEXT,

"createdAt" TIMESTAMP NOT NULL DEFAULT CURRENT_TIMESTAMP,

"updatedAt" TIMESTAMP NOT NULL DEFAULT CURRENT_TIMESTAMP

);

CREATE TABLE IF NOT EXISTS "verification" (

"id" TEXT PRIMARY KEY NOT NULL,

"identifier" TEXT NOT NULL,

"value" TEXT NOT NULL,

"expiresAt" TIMESTAMP NOT NULL,

"createdAt" TIMESTAMP,

"updatedAt" TIMESTAMP

);

Encore applies this migration automatically when you start the app with encore run, and again when you deploy.

Configuring BetterAuth

Now configure BetterAuth with email/password authentication:

// auth/better-auth.ts

import { betterAuth } from "better-auth";

import { Pool } from "pg";

import { DB } from "./db";

// For local testing, hardcode the secret

// TODO: In production, use Encore's secret management

const authSecret = "your-secret-key-here-replace-in-production";

// Create a PostgreSQL pool for BetterAuth

const pool = new Pool({

connectionString: DB.connectionString,

});

// Create BetterAuth instance

export const auth = betterAuth({

database: pool,

secret: authSecret,

emailAndPassword: {

enabled: true,

requireEmailVerification: false, // Set to true in production

},

session: {

expiresIn: 60 * 60 * 24 * 7, // 7 days

updateAge: 60 * 60 * 24, // Update session every 24 hours

},

});

Security note: For production, use Encore's secrets management instead of hardcoding the secret. Run encore secret set --dev BetterAuthSecret for local development (or --prod for production) and use secret("BetterAuthSecret")() in your code.
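Whatever value you store as BetterAuthSecret should be long and random. A sketch that generates one with Node's built-in crypto module; 32 bytes is a common choice, not a documented BetterAuth requirement, and generateAuthSecret is a hypothetical name:

```typescript
import { randomBytes } from "node:crypto";

// Generates a random value suitable for pasting into `encore secret set`.
// 32 random bytes encoded as base64 give a 44-character string.
export function generateAuthSecret(bytes = 32): string {
  return randomBytes(bytes).toString("base64");
}

console.log(generateAuthSecret());
```

Run it once and paste the printed value when encore secret set --dev BetterAuthSecret prompts for it, then generate a different value for --prod.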

Implementing authentication endpoints

Create the signup, signin, and signout endpoints:

// auth/auth.ts

import { api } from "encore.dev/api";

import { auth } from "./better-auth";

import log from "encore.dev/log";

// Register a new user

interface SignUpRequest {

email: string;

password: string;

name: string;

}

interface AuthResponse {

user: {

id: string;

email: string;

name: string;

};

session: {

token: string;

expiresAt: Date;

};

}

export const signUp = api(

{ expose: true, method: "POST", path: "/auth/signup" },

async (req: SignUpRequest): Promise<AuthResponse> => {

log.info("User signup attempt", { email: req.email });

const result = await auth.api.signUpEmail({

body: {

email: req.email,

password: req.password,

name: req.name,

},

});

if (!result.user || !result.token) {

throw new Error("Failed to create user");

}

return {

user: {

id: result.user.id,

email: result.user.email,

name: result.user.name,

},

session: {

token: result.token,

expiresAt: new Date(Date.now() + 60 * 60 * 24 * 7 * 1000),

},

};

}

);

// Sign in existing user

interface SignInRequest {

email: string;

password: string;

}

export const signIn = api(

{ expose: true, method: "POST", path: "/auth/signin" },

async (req: SignInRequest): Promise<AuthResponse> => {

log.info("User signin attempt", { email: req.email });

const result = await auth.api.signInEmail({

body: {

email: req.email,

password: req.password,

},

});

if (!result.user || !result.token) {

throw new Error("Invalid credentials");

}

return {

user: {

id: result.user.id,

email: result.user.email,

name: result.user.name,

},

session: {

token: result.token,

expiresAt: new Date(Date.now() + 60 * 60 * 24 * 7 * 1000),

},

};

}

);

// Sign out user

interface SignOutRequest {

token: string;

}

export const signOut = api(

{ expose: true, method: "POST", path: "/auth/signout" },

async (req: SignOutRequest): Promise<{ success: boolean }> => {

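// Better Auth's signOut endpoint reads the session from request headers (cookies)

// rather than from the request body, so delete the session by token through

// Better Auth's internal adapter instead (assumes the auth.$context API of Better Auth v1)

const ctx = await auth.$context;

await ctx.internalAdapter.deleteSession(req.token);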

return { success: true };

}

);

Creating the auth handler

To protect endpoints and make authenticated user data available throughout your app, create an auth handler:

// auth/handler.ts

import { APIError, Gateway, Header } from "encore.dev/api";

import { authHandler } from "encore.dev/auth";

import { db } from "./db";

import { session, user } from "./schema";

import { eq } from "drizzle-orm";

import log from "encore.dev/log";

// Define what we extract from the Authorization header

interface AuthParams {

authorization: Header<"Authorization">;

}

// Define what authenticated data we make available to endpoints

export interface AuthData {

userID: string;

email: string;

name: string;

}

const myAuthHandler = authHandler(

async (params: AuthParams): Promise<AuthData> => {

const token = params.authorization.replace("Bearer ", "");

if (!token) {

throw APIError.unauthenticated("no token provided");

}

try {

// Query the session from the database

const sessionRows = await db

.select({

userId: session.userId,

expiresAt: session.expiresAt,

})

.from(session)

.where(eq(session.token, token))

.limit(1);

const sessionRow = sessionRows[0];

if (!sessionRow) {

throw APIError.unauthenticated("invalid session");

}

// Check if session is expired

if (new Date(sessionRow.expiresAt) < new Date()) {

throw APIError.unauthenticated("session expired");

}

// Get user info

const userRows = await db

.select({

id: user.id,

email: user.email,

name: user.name,

})

.from(user)

.where(eq(user.id, sessionRow.userId))

.limit(1);

const userRow = userRows[0];

if (!userRow) {

throw APIError.unauthenticated("user not found");

}

return {

userID: userRow.id,

email: userRow.email,

name: userRow.name,

};

} catch (e) {

log.error(e);

throw APIError.unauthenticated("invalid token", e as Error);

}

}

);

// Create gateway with auth handler

export const gateway = new Gateway({ authHandler: myAuthHandler });

Now any endpoint can require authentication by setting auth: true, and access user data with getAuthData().
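The handler strips the prefix with replace("Bearer ", ""), which leaves the header unchanged when it doesn't start with "Bearer ", so any raw value is looked up as a session token, and a lowercase "bearer" scheme (HTTP auth schemes are case-insensitive) isn't stripped. A stricter helper that both the handler and validateToken in the upload endpoint could share might look like this; parseBearerToken is a hypothetical name:

```typescript
// Hypothetical helper: extracts the token from an "Authorization: Bearer <token>" header.
// Returns null for missing, malformed, or non-Bearer headers.
// The scheme name is matched case-insensitively.
export function parseBearerToken(header: string | undefined): string | null {
  if (!header) return null;
  const match = /^Bearer\s+(\S+)$/i.exec(header.trim());
  return match ? match[1] : null;
}
```

In the auth handler, a null result would become APIError.unauthenticated; in validateToken, it would mean an anonymous upload.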

Setting up payments with Polar

Now that we have authentication, let's add the subscription system using Polar.

Creating the payments service

// payments/encore.service.ts

import { Service } from "encore.dev/service";

export default new Service("payments");

Configuring Polar authentication

To communicate with Polar's API, you need to authenticate with the organization access token you created earlier. Encore's secrets management keeps sensitive values out of your codebase.

Secrets are defined in code but their values are set separately for each environment (development, staging, production). This means you can safely commit your code to version control without exposing credentials.

Create a file to configure the Polar SDK:

// payments/polar.ts

import { Polar } from "@polar-sh/sdk";

import { appMeta } from "encore.dev";

import { secret } from "encore.dev/config";

// Define the secret - the actual value is set per environment

const polarAccessToken = secret("PolarAccessToken");

// Use Polar's production API only in Encore production environments; everything else uses the sandbox

const server = appMeta().environment.type === "production"

? "production"

: "sandbox";

// Initialize the Polar SDK with your access token

export const polar = new Polar({

accessToken: polarAccessToken(),

server: server,

});

This configuration uses Polar's sandbox environment for local development and testing, switching to production when you deploy. You can test the full payment flow without processing real payments.

Now set your Polar access token for each environment:

# Development (uses Polar Sandbox)

encore secret set --dev PolarAccessToken

# Paste your sandbox access token

# Production (when you're ready to deploy)

encore secret set --prod PolarAccessToken

# Paste your production access token

The code detects the environment and uses the appropriate Polar endpoint (sandbox or production).

Setting up the payments database

The payments service needs its own database to track subscription state. While Polar is the source of truth for billing, we maintain a local copy of subscription data for fast access control checks. This way, when a user tries to upload a large file, we can instantly verify their tier without making an API call to Polar on every request.

Each Encore service can have its own database, keeping data logically separated. Define the payments database:

// payments/db.ts

import { SQLDatabase } from "encore.dev/storage/sqldb";

export const db = new SQLDatabase("payments", {

migrations: "./migrations",

});

This database will store customer information and subscription status, kept in sync with Polar via webhooks (which we'll implement shortly).

Creating the payments schema

We need two tables: one for customers and one for their subscriptions. This schema tracks the minimum information needed to make authorization decisions about file uploads.

Create the migration file:

-- payments/migrations/1_create_subscriptions.up.sql

CREATE TABLE customers (

customer_id TEXT PRIMARY KEY,

email TEXT NOT NULL,

name TEXT,

user_id TEXT, -- Link to auth.user.id

created_at TIMESTAMP NOT NULL DEFAULT CURRENT_TIMESTAMP,

updated_at TIMESTAMP NOT NULL DEFAULT CURRENT_TIMESTAMP

);

CREATE TABLE subscriptions (

subscription_id TEXT PRIMARY KEY,

customer_id TEXT NOT NULL REFERENCES customers(customer_id) ON DELETE CASCADE,

product_id TEXT NOT NULL,

status TEXT NOT NULL,

current_period_start TIMESTAMP,

current_period_end TIMESTAMP,

cancel_at_period_end BOOLEAN DEFAULT false,

created_at TIMESTAMP NOT NULL DEFAULT CURRENT_TIMESTAMP,

updated_at TIMESTAMP NOT NULL DEFAULT CURRENT_TIMESTAMP

);

CREATE INDEX idx_subscriptions_customer ON subscriptions(customer_id);

CREATE INDEX idx_subscriptions_status ON subscriptions(status);

CREATE INDEX idx_customers_user_id ON customers(user_id);

The user_id column links Polar customers to authenticated users, allowing us to check subscription status for logged-in users.

Checking subscription status

Now that we're tracking subscriptions in our database, we need a way to check whether an authenticated user has an active premium subscription. This will be called before file uploads to determine size limits and retention period.

We'll create both an internal helper function and a protected API endpoint:

// payments/subscriptions.ts

import { api } from "encore.dev/api";

import { db } from "./db";

import { getAuthData } from "~encore/auth";

import log from "encore.dev/log";

export interface SubscriptionStatus {

hasActiveSubscription: boolean;

subscription?: {

id: string;

productId: string;

status: string;

currentPeriodEnd: Date;

};

}

// Internal helper function to check subscription by user ID

export async function checkSubscriptionByUserId(userId: string): Promise<SubscriptionStatus> {

log.info("Checking subscription for user", { userId });

// Find customer by user_id

const customer = await db.queryRow<{ customer_id: string }>`

SELECT customer_id

FROM customers

WHERE user_id = ${userId}

LIMIT 1

`;

if (!customer) {

log.info("No customer found for user", { userId });

return { hasActiveSubscription: false };

}

// Check for active subscription

const subscription = await db.queryRow<{

subscription_id: string;

product_id: string;

status: string;

current_period_end: Date;

}>`

SELECT subscription_id, product_id, status, current_period_end

FROM subscriptions

WHERE customer_id = ${customer.customer_id}

AND status = 'active'

AND (current_period_end IS NULL OR current_period_end > NOW())

ORDER BY created_at DESC

LIMIT 1

`;

if (!subscription) {

log.info("No active subscription found for user", { userId });

return { hasActiveSubscription: false };

}

log.info("Active subscription found", {

userId,

subscriptionId: subscription.subscription_id

});

return {

hasActiveSubscription: true,

subscription: {

id: subscription.subscription_id,

productId: subscription.product_id,

status: subscription.status,

currentPeriodEnd: subscription.current_period_end,

},

};

}

// API endpoint for frontend to check subscription status

export const checkSubscription = api(

{ auth: true, expose: true, method: "GET", path: "/subscriptions/me" },

async (): Promise<SubscriptionStatus> => {

const authData = getAuthData()!;

return checkSubscriptionByUserId(authData.userID);

}

);

This approach uses the authenticated user's ID to look up their Polar customer record and check subscription status.
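The SQL query encodes the premium rule: status must be 'active', and current_period_end must be empty or in the future. Mirroring that rule as a pure function makes it easy to unit-test. A sketch whose row shape follows the subscriptions table above; isPremiumSubscription is a hypothetical name:

```typescript
// Row shape matching the columns used from the subscriptions table.
interface SubscriptionRow {
  status: string;
  current_period_end: Date | null;
}

// Same predicate as the WHERE clause in checkSubscriptionByUserId.
function isPremiumSubscription(row: SubscriptionRow, now: Date = new Date()): boolean {
  const periodOk =
    row.current_period_end === null ||
    row.current_period_end.getTime() > now.getTime();
  return row.status === "active" && periodOk;
}
```

If you offer free trials, you may also want to treat a trialing status as premium; check Polar's documented subscription statuses before relying on that.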

Creating your Premium subscription product

Before users can subscribe, you need to create a product in Polar. A product represents what you're selling: in our case, "Premium File Sharing" with specific benefits (5GB uploads, 30-day retention).

You can create products either through the Polar Dashboard (easier) or programmatically via the API. Here's how to do it via the API:

// payments/products.ts

import { api } from "encore.dev/api";

import { polar } from "./polar";

interface CreatePremiumProductResponse {

productId: string;

checkoutUrl: string;

}

export const createPremiumProduct = api(

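// Exposed publicly for convenience during setup: anyone could call it, so remove it

// or restrict it (for example with auth: true) before deploying

{ expose: true, method: "POST", path: "/products/premium" },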

async (): Promise<CreatePremiumProductResponse> => {

const product = await polar.products.create({

name: "Premium File Sharing",

description: "Upload files up to 5GB, 30-day retention, no ads",

organizationId: "YOUR_ORG_ID", // Replace with your Polar org ID

prices: [

{

priceAmount: 999, // $9.99/month

priceCurrency: "USD",

recurring: {

interval: "month",

},

},

],

});

return {

productId: product.id,

checkoutUrl: `https://polar.sh/checkout/${product.id}`,

};

}

);

Tip: For a one-time setup like this, creating the product in the Polar Dashboard is often simpler than calling the API, and it avoids keeping a product-creation endpoint in your app.

Handling subscription webhooks

When a user subscribes, cancels, or updates their subscription in Polar, how does our application know about it? This is where webhooks come in.

Polar sends HTTP POST requests to a webhook endpoint we provide whenever subscription events occur. These webhooks contain data about what changed, and we use them to keep our local database in sync with Polar's records.

This architecture means:

- Fast access checks (query our local database, not Polar's API)

- No polling needed (Polar pushes updates to us in real-time)

- Resilience if Polar is temporarily unavailable (we have cached subscription state)
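Because the webhook endpoint is public, anyone who finds its URL could POST a fake subscription.created event and grant themselves premium, so each delivery should be verified before it is trusted. Polar signs webhooks following the Standard Webhooks specification, and its SDK ships a validateEvent helper in @polar-sh/sdk/webhooks that you should prefer in practice. To show what that check involves, here is a standalone sketch of the Standard Webhooks scheme: an HMAC-SHA256 over "id.timestamp.body", compared against each v1 signature in the webhook-signature header, plus a timestamp tolerance against replays. How the HMAC key is derived from the secret shown in Polar's dashboard is an assumption left to the caller, so the function takes raw key bytes; verifyWebhookSignature is a hypothetical name.

```typescript
import { createHmac, timingSafeEqual } from "node:crypto";

interface WebhookHeaders {
  id: string;        // webhook-id header
  timestamp: string; // webhook-timestamp header (Unix seconds)
  signature: string; // webhook-signature header, e.g. "v1,<base64> v1,<base64>"
}

// Sketch of Standard Webhooks verification. `key` is the raw HMAC key;
// deriving it from Polar's webhook secret is left to the caller (assumption).
export function verifyWebhookSignature(
  rawBody: string,
  headers: WebhookHeaders,
  key: Buffer,
  toleranceSeconds = 300,
  nowSeconds = Math.floor(Date.now() / 1000),
): boolean {
  const ts = Number(headers.timestamp);
  // Reject stale or future-dated deliveries to limit replay attacks.
  if (!Number.isInteger(ts) || Math.abs(nowSeconds - ts) > toleranceSeconds) {
    return false;
  }

  // The signed content is "<id>.<timestamp>.<raw body>".
  const expected = createHmac("sha256", key)
    .update(`${headers.id}.${headers.timestamp}.${rawBody}`)
    .digest();

  // The header may carry several space-separated "v1,<base64>" signatures (key rotation).
  return headers.signature.split(" ").some((entry) => {
    const [version, sig] = entry.split(",");
    if (version !== "v1" || !sig) return false;
    const given = Buffer.from(sig, "base64");
    return given.length === expected.length && timingSafeEqual(given, expected);
  });
}
```

In the handler, this check would run on the raw body text before JSON.parse, reading the three values from the webhook-id, webhook-timestamp, and webhook-signature request headers and answering with a 4xx status when verification fails. The signing secret itself can be stored with encore secret set, like the access token.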

Let's implement the webhook handler:

```typescript
// payments/webhooks.ts
import { api } from "encore.dev/api";
import log from "encore.dev/log";
import { eq } from "drizzle-orm";
import { db } from "./db";
import { db as authDb } from "../auth/db";
import { user } from "../auth/schema";

interface PolarCustomer {
  id: string;
  email: string;
  name?: string;
}

interface PolarSubscription {
  id: string;
  customer_id: string;
  product_id: string;
  status: string;
  current_period_start?: string;
  current_period_end?: string;
  cancel_at_period_end?: boolean;
  customer?: PolarCustomer;
}

// Simplified event shape used in this tutorial (it matches the curl test below);
// check Polar's webhook reference for the exact payload schema.
interface PolarWebhookEvent {
  type: string;
  data: {
    object: PolarSubscription | PolarCustomer;
  };
}

export const handleWebhook = api.raw(
  { expose: true, path: "/webhooks/polar", method: "POST" },
  async (req, res) => {
    // Read the raw request body (signature verification also needs the raw bytes)
    const chunks: Buffer[] = [];
    for await (const chunk of req) {
      chunks.push(chunk);
    }
    const bodyText = Buffer.concat(chunks).toString("utf8");

    // Reject malformed payloads instead of crashing the handler
    let event: PolarWebhookEvent;
    try {
      event = JSON.parse(bodyText) as PolarWebhookEvent;
    } catch {
      res.writeHead(400);
      res.end();
      return;
    }

    log.info("Received Polar webhook", { type: event.type });

    switch (event.type) {
      case "subscription.created":
      case "subscription.updated":
        await syncSubscription(event.data.object as PolarSubscription);
        break;
      case "subscription.canceled":
        // Polar may keep access active until the end of the paid period after a
        // cancellation; adjust this if access should only end when the period is over.
        await cancelSubscription(event.data.object.id);
        break;
      case "customer.created":
      case "customer.updated":
        // For customer events, the object itself is the customer
        await syncCustomer(event.data.object as PolarCustomer);
        break;
    }

    res.writeHead(200);
    res.end();
  }
);

async function syncSubscription(subscription: PolarSubscription) {
  // Make sure the customer row exists before the subscription references it
  if (subscription.customer) {
    await syncCustomer(subscription.customer);
  }

  // Parse ISO timestamps into Date objects
  const periodStart = subscription.current_period_start
    ? new Date(subscription.current_period_start)
    : null;
  const periodEnd = subscription.current_period_end
    ? new Date(subscription.current_period_end)
    : null;
  const cancelAtPeriodEnd = subscription.cancel_at_period_end ?? false;

  // Upsert: insert new subscriptions, update existing ones
  await db.exec`
    INSERT INTO subscriptions (
      subscription_id, customer_id, product_id, status,
      current_period_start, current_period_end, cancel_at_period_end, updated_at
    )
    VALUES (
      ${subscription.id}, ${subscription.customer_id}, ${subscription.product_id},
      ${subscription.status}, ${periodStart}, ${periodEnd}, ${cancelAtPeriodEnd}, NOW()
    )
    ON CONFLICT (subscription_id)
    DO UPDATE SET
      status = ${subscription.status},
      current_period_start = ${periodStart},
      current_period_end = ${periodEnd},
      cancel_at_period_end = ${cancelAtPeriodEnd},
      updated_at = NOW()
  `;

  log.info("Synced subscription", { subscriptionId: subscription.id });
}

async function syncCustomer(customer: PolarCustomer | undefined) {
  if (!customer) return;

  // Link the Polar customer to an app user by matching email addresses
  const userRows = await authDb
    .select({ id: user.id })
    .from(user)
    .where(eq(user.email, customer.email))
    .limit(1);
  const userId = userRows[0]?.id ?? null;

  if (userId) {
    log.info("Linking Polar customer to user", {
      customerId: customer.id,
      userId,
      email: customer.email,
    });
  } else {
    log.warn("No user found for Polar customer email", {
      customerId: customer.id,
      email: customer.email,
    });
  }

  // Pass null rather than undefined for a missing name
  const name = customer.name ?? null;

  await db.exec`
    INSERT INTO customers (customer_id, email, name, user_id, updated_at)
    VALUES (${customer.id}, ${customer.email}, ${name}, ${userId}, NOW())
    ON CONFLICT (customer_id)
    DO UPDATE SET
      email = ${customer.email},
      name = ${name},
      user_id = ${userId},
      updated_at = NOW()
  `;
}

async function cancelSubscription(subscriptionId: string) {
  await db.exec`
    UPDATE subscriptions
    SET status = 'canceled', updated_at = NOW()
    WHERE subscription_id = ${subscriptionId}
  `;

  log.info("Canceled subscription", { subscriptionId });
}
```

Important: In production, verify webhook signatures to ensure requests are from Polar.

Configure the webhook URL in the Polar Dashboard: https://your-domain.com/webhooks/polar
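Polar's webhooks are based on the Standard Webhooks specification, and its SDK includes a validation helper. Check Polar's current docs for that helper and for how the webhook secret maps to the HMAC key. The sketch below shows what such verification does, using only node:crypto. It assumes the Standard Webhooks scheme: an HMAC-SHA256 over "webhook-id.webhook-timestamp.body", sent base64-encoded as "v1,<sig>" in the webhook-signature header. The function name, the five-minute tolerance, and passing the key as raw bytes are all assumptions:

```typescript
import { createHmac, timingSafeEqual } from "node:crypto";

type HeaderMap = Record<string, string | string[] | undefined>;

// Read a single header value (Node lowercases incoming header names)
function header(headers: HeaderMap, name: string): string | undefined {
  const value = headers[name];
  return Array.isArray(value) ? value[0] : value;
}

// Sketch of Standard Webhooks verification (assumed scheme, see lead-in)
export function verifyWebhook(
  rawBody: string,
  headers: HeaderMap,
  key: Buffer, // HMAC key bytes derived from your webhook secret (see Polar's docs)
  nowSeconds: number = Math.floor(Date.now() / 1000),
  toleranceSeconds = 300,
): boolean {
  const id = header(headers, "webhook-id");
  const timestamp = header(headers, "webhook-timestamp");
  const signatures = header(headers, "webhook-signature");
  if (!id || !timestamp || !signatures) return false;

  // Reject deliveries outside the tolerance window to limit replay attacks
  const ts = Number(timestamp);
  if (!Number.isInteger(ts) || Math.abs(nowSeconds - ts) > toleranceSeconds) return false;

  const expected = createHmac("sha256", key).update(`${id}.${timestamp}.${rawBody}`).digest();

  // The header may contain several space-separated "v1,<base64>" entries
  return signatures.split(" ").some((entry) => {
    const [version, signature] = entry.split(",");
    if (version !== "v1" || !signature) return false;
    const received = Buffer.from(signature, "base64");
    // timingSafeEqual throws on length mismatch, so compare lengths first
    return received.length === expected.length && timingSafeEqual(received, expected);
  });
}
```

In handleWebhook, you would call this with bodyText and req.headers before JSON.parse, and respond with 401 when it returns false.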

Creating checkout sessions for users to subscribe

When an authenticated user wants to upgrade to premium, generate a Polar checkout URL. The checkout page is hosted by Polar and handles payment methods, tax calculation, and compliance. After payment, Polar redirects the user back to your app and sends a webhook to activate their subscription.

Let's create a protected endpoint that generates checkout URLs for authenticated users:

```typescript
// payments/checkout.ts
import { api, APIError } from "encore.dev/api";
import { appMeta } from "encore.dev";
import { getAuthData } from "~encore/auth";
import log from "encore.dev/log";
import { polar } from "./polar";

interface CreateCheckoutRequest {
  productPriceId: string;
}

interface CreateCheckoutResponse {
  checkoutUrl: string;
}

export const createCheckout = api(
  { auth: true, expose: true, method: "POST", path: "/checkout" },
  async (req: CreateCheckoutRequest): Promise<CreateCheckoutResponse> => {
    const authData = getAuthData()!;
    // Base URL of this Encore app in the current environment
    const baseUrl = appMeta().apiBaseUrl;

    try {
      // Create a checkout session using the Polar SDK. Sandbox vs production is
      // decided by how the Polar client is configured in ./polar.
      // Depending on your @polar-sh/sdk version, checkouts may take a list of
      // product IDs instead of a single price ID; check the SDK reference.
      const session = await polar.checkouts.create({
        productPriceId: req.productPriceId,
        customerEmail: authData.email,
        successUrl: `${baseUrl}/?success=true`,
      });

      log.info("Created Polar checkout session", {
        sessionId: session.id,
        userId: authData.userID,
        email: authData.email,
      });

      if (!session.url) {
        throw new Error("Polar returned a checkout session without a URL");
      }
      return { checkoutUrl: session.url };
    } catch (error) {
      log.error("Failed to create checkout", {
        error: String(error),
        productPriceId: req.productPriceId,
        userId: authData.userID,
      });
      // Return a proper 500 without leaking internal error details to the client
      throw APIError.internal("Failed to create checkout");
    }
  }
);
```

The endpoint is protected with auth: true, so only authenticated users can create checkouts. The user's email from their session is passed to the Polar checkout.
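On the frontend, the upgrade button only needs to call this endpoint and then navigate to the returned URL. The sketch below makes three assumptions: the auth handler reads the BetterAuth session cookie (hence credentials: "include"), the caller supplies the API base URL and price ID, and fetch can be injected for testing. startCheckout is a hypothetical helper, not the example's upgradeToPremium function:

```typescript
interface CheckoutResponse {
  checkoutUrl: string;
}

// Hypothetical helper: ask the backend for a Polar checkout URL for the signed-in user
export async function startCheckout(
  apiBaseUrl: string,
  productPriceId: string,
  fetchImpl: typeof fetch = fetch,
): Promise<string> {
  const res = await fetchImpl(`${apiBaseUrl}/checkout`, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    credentials: "include", // send the session cookie even if the API is on another origin
    body: JSON.stringify({ productPriceId }),
  });
  if (!res.ok) {
    throw new Error(`Checkout request failed with status ${res.status}`);
  }
  const { checkoutUrl } = (await res.json()) as CheckoutResponse;
  if (!checkoutUrl) throw new Error("Backend returned an empty checkout URL");
  return checkoutUrl;
}
```

In the browser, you would call it as window.location.href = await startCheckout(location.origin, priceId). A 401 here means the user needs to sign in first.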

Testing your file sharing service locally

Time to see everything in action! Make sure Docker is running (Encore uses it to provision your local PostgreSQL databases and object storage), then start your backend:

encore run

Your API will be available at http://localhost:4000 and the development dashboard at http://localhost:9400.

1. Create a premium user via webhook simulation

Before we can test the tier differences, we need to simulate a user subscribing. In production, Polar would send this webhook automatically when someone completes checkout. For local testing, we'll send it manually using curl:

```bash
curl -X POST http://localhost:4000/webhooks/polar \
  -H "Content-Type: application/json" \
  -d '{
    "type": "subscription.created",
    "data": {
      "object": {
        "id": "sub_premium123",
        "customer_id": "cus_premium",
        "product_id": "prod_premium",
        "status": "active",
        "current_period_start": "2024-11-18T00:00:00Z",
        "current_period_end": "2024-12-18T00:00:00Z",
        "cancel_at_period_end": false,
        "customer": {
          "id": "cus_premium",
          "email": "premium@example.com",
          "name": "Premium User"
        }
      }
    }
  }'
```

2. Test file upload as a free user

Now let's test the free tier limits. We'll create a 50MB file (under the 100MB free limit) and upload it as a non-subscribed user. This should succeed:

```bash
# Create a 50MB test file
dd if=/dev/zero of=test-50mb.bin bs=1M count=50

# Upload as free user
curl -X POST http://localhost:4000/upload \
  -H "X-Customer-Id: cus_free" \
  -H "X-Filename: test-50mb.bin" \
  -H "Content-Type: application/octet-stream" \
  --data-binary @test-50mb.bin
```

Response:

```json
{
  "fileId": "file_1234567890_abc123",
  "downloadUrl": "http://localhost:4000/download/file_1234567890_abc123",
  "expiresAt": "2024-11-25T00:00:00.000Z"
}
```

3. Test free tier upload limits

This is where it gets interesting. Let's try to upload a 150MB file as a free user. This should be rejected since it exceeds the 100MB free tier limit:

```bash
# Create a 150MB file (exceeds 100MB free limit)
dd if=/dev/zero of=test-150mb.bin bs=1M count=150

# Upload as free user
curl -X POST http://localhost:4000/upload \
  -H "X-Customer-Id: cus_free" \
  -H "X-Filename: test-150mb.bin" \
  -H "Content-Type: application/octet-stream" \
  --data-binary @test-150mb.bin
```

Response (413 Payload Too Large):

```json
{
  "error": "file_too_large",
  "maxSize": 104857600,
  "tier": "free",
  "upgradeUrl": "https://polar.sh/your-org/subscribe"
}
```
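This response comes from comparing the upload size against the caller's tier. The sketch below shows that decision using this tutorial's limits: 100MB and 7 days for free, 5GB and 30 days for premium. It treats MB and GB as powers of 1024, which matches the maxSize of 104857600 above. TIER_LIMITS and checkUpload are illustrative names, and the upgradeUrl is the placeholder from the sample response:

```typescript
type Tier = "free" | "premium";

// Limits used in this tutorial; 1MB = 1024 * 1024 bytes
const MB = 1024 * 1024;
const DAY_MS = 24 * 60 * 60 * 1000;
const TIER_LIMITS: Record<Tier, { maxBytes: number; retentionDays: number }> = {
  free: { maxBytes: 100 * MB, retentionDays: 7 },
  premium: { maxBytes: 5 * 1024 * MB, retentionDays: 30 },
};

type UploadDecision =
  | { ok: true; expiresAt: Date }
  | { ok: false; error: "file_too_large"; maxSize: number; tier: Tier; upgradeUrl: string };

// Decide whether an upload of sizeBytes is allowed and, if so, when it expires
export function checkUpload(sizeBytes: number, tier: Tier, now: Date = new Date()): UploadDecision {
  const { maxBytes, retentionDays } = TIER_LIMITS[tier];
  if (sizeBytes > maxBytes) {
    return {
      ok: false,
      error: "file_too_large",
      maxSize: maxBytes,
      tier,
      upgradeUrl: "https://polar.sh/your-org/subscribe", // placeholder
    };
  }
  return { ok: true, expiresAt: new Date(now.getTime() + retentionDays * DAY_MS) };
}
```

The comparison is strictly greater-than, so a file of exactly 100MB is still accepted on the free tier.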

4. Test premium tier with large file upload

Now let's verify that premium users can upload larger files. Using the same 150MB file, but with our premium customer ID that we created via the webhook in step 1:

```bash
# Same 150MB file, but with premium customer ID
curl -X POST http://localhost:4000/upload \
  -H "X-Customer-Id: cus_premium" \
  -H "X-Filename: test-150mb.bin" \
  -H "Content-Type: application/octet-stream" \
  --data-binary @test-150mb.bin
```

Success! Premium users can upload files up to 5GB.

5. Test file downloads

Finally, let's download one of the files we uploaded. Grab the downloadUrl from any successful upload response above and fetch it:

curl -O http://localhost:4000/download/file_1234567890_abc123
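curl -O names the saved file after the last URL segment, which here is the file ID. If the download endpoint sends a Content-Disposition header, browsers and curl -OJ can use the original filename instead. Below is a sketch of building that header following RFC 6266, with an RFC 5987-encoded filename* for non-ASCII names. It assumes the download handler has the stored filename available, and contentDisposition is a hypothetical helper:

```typescript
// Build a Content-Disposition header that preserves the original filename
export function contentDisposition(filename: string): string {
  // ASCII-only fallback for older clients: replace non-ASCII, quotes and backslashes
  const fallback = filename.replace(/[^\x20-\x7e]|["\\]/g, "_");
  // RFC 5987 encoding for filename*: encodeURIComponent leaves ' ( ) * unescaped,
  // but they are not allowed there, so percent-encode them too
  const encoded = encodeURIComponent(filename).replace(
    /['()*]/g,
    (c) => "%" + c.charCodeAt(0).toString(16).toUpperCase(),
  );
  return `attachment; filename="${fallback}"; filename*=UTF-8''${encoded}`;
}
```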

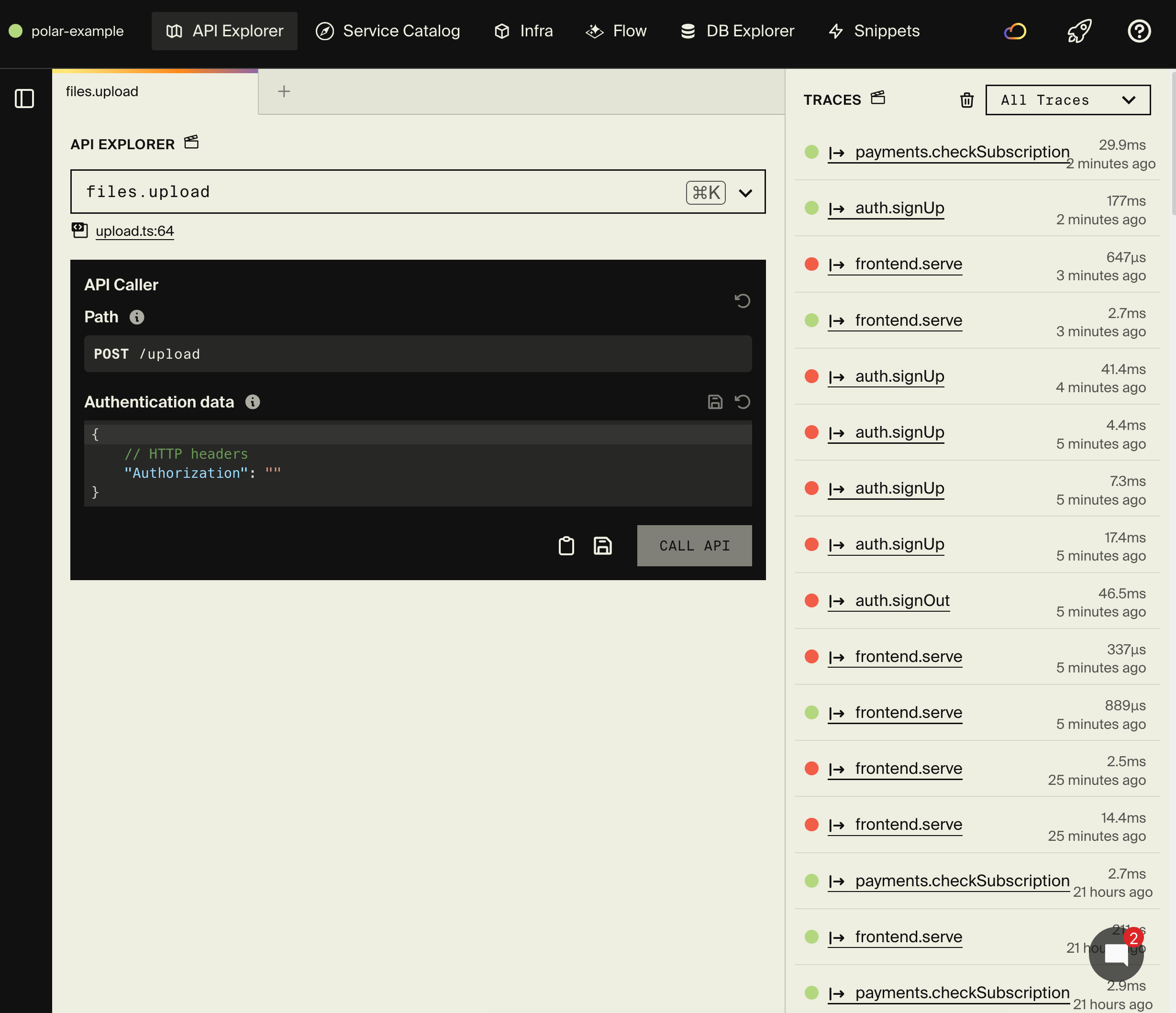

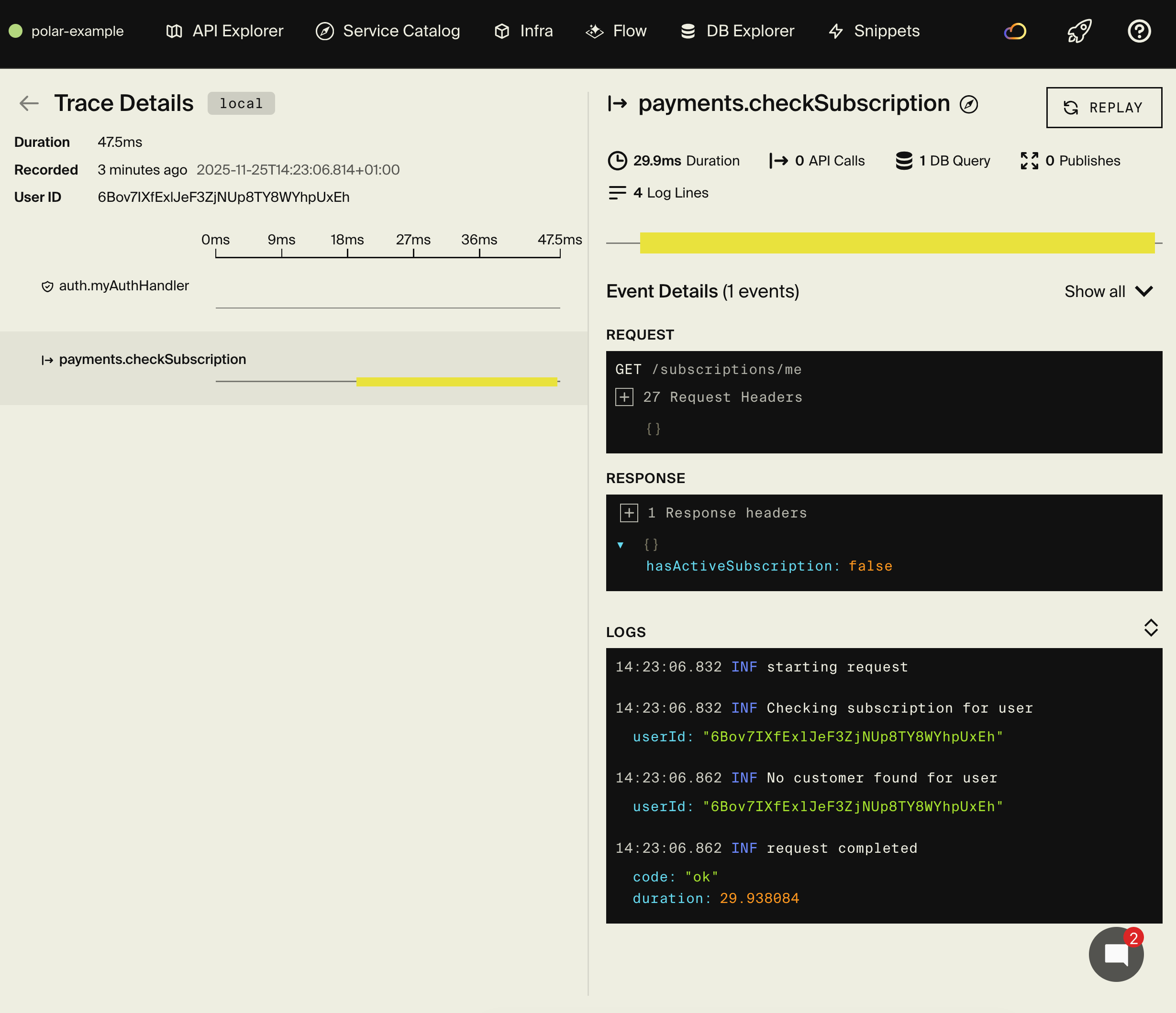

Exploring the local development dashboard

The local development dashboard at http://localhost:9400 provides observability and debugging tools for your Encore app.

API Explorer: Test endpoints interactively. Upload files, trigger webhooks, and see responses in real-time.

Service Catalog: Browse all your endpoints with auto-generated documentation derived from your TypeScript types. No need to manually write OpenAPI specs.

Architecture Diagram: See a visual representation of your microservices and how they communicate. Watch as your app grows from simple to complex.

Distributed Tracing: This is where Encore really shines. Click on any API call and see the complete flow: the request comes in, queries the database to check subscription status, uploads to the object storage bucket, inserts metadata, and returns. Every database query, every service call, with timing information. This is invaluable for understanding performance and debugging issues.

Database Explorer: Browse your tables with a built-in SQL IDE powered by Drizzle Studio. After running the tests above, you can:

- Query the files table to see uploaded files with their expiration dates

- Check the subscriptions table to verify that webhook data synced correctly

- Compare retention periods: free tier files expire in 7 days, premium in 30 days

- Run custom SQL queries to debug data issues

Try clicking through the dashboard while testing your API. The tracing view will blow your mind.

Listing user files

Users should be able to see all the files they've uploaded, along with download links and expiration dates. This is a simple endpoint that queries the database and returns file metadata.

Note that we filter out expired files automatically - there's no point showing users files they can no longer download. A cron job (which we'll add later) can clean these up from storage and the database.

```typescript
// files/list.ts
import { api } from "encore.dev/api";
import { appMeta } from "encore.dev";
import { db } from "./db";

interface ListFilesRequest {
  customerId: string;
}

interface FileInfo {
  id: string;
  filename: string;
  sizeBytes: number;
  downloadUrl: string;
  expiresAt: Date;
  createdAt: Date;
}

interface ListFilesResponse {
  files: FileInfo[];
}

// Note: anyone who knows a customer ID can list that customer's files; in production,
// consider requiring auth and deriving the customer ID from the session instead.
export const listFiles = api(
  { expose: true, method: "GET", path: "/files/:customerId" },
  async ({ customerId }: ListFilesRequest): Promise<ListFilesResponse> => {
    // db.query returns an async iterator of rows; expired files are filtered out in SQL
    const rows = db.query<{
      id: string;
      filename: string;
      size_bytes: number;
      expires_at: Date;
      created_at: Date;
    }>`
      SELECT id, filename, size_bytes, expires_at, created_at
      FROM files
      WHERE uploaded_by = ${customerId}
        AND expires_at > NOW()
      ORDER BY created_at DESC
    `;

    // Base URL of this Encore app in the current environment
    const baseUrl = appMeta().apiBaseUrl;
    const files: FileInfo[] = [];
    for await (const row of rows) {
      files.push({
        id: row.id,
        filename: row.filename,
        sizeBytes: row.size_bytes,
        downloadUrl: `${baseUrl}/download/${row.id}`,
        expiresAt: row.expires_at,
        createdAt: row.created_at,
      });
    }

    return { files };
  }
);
```

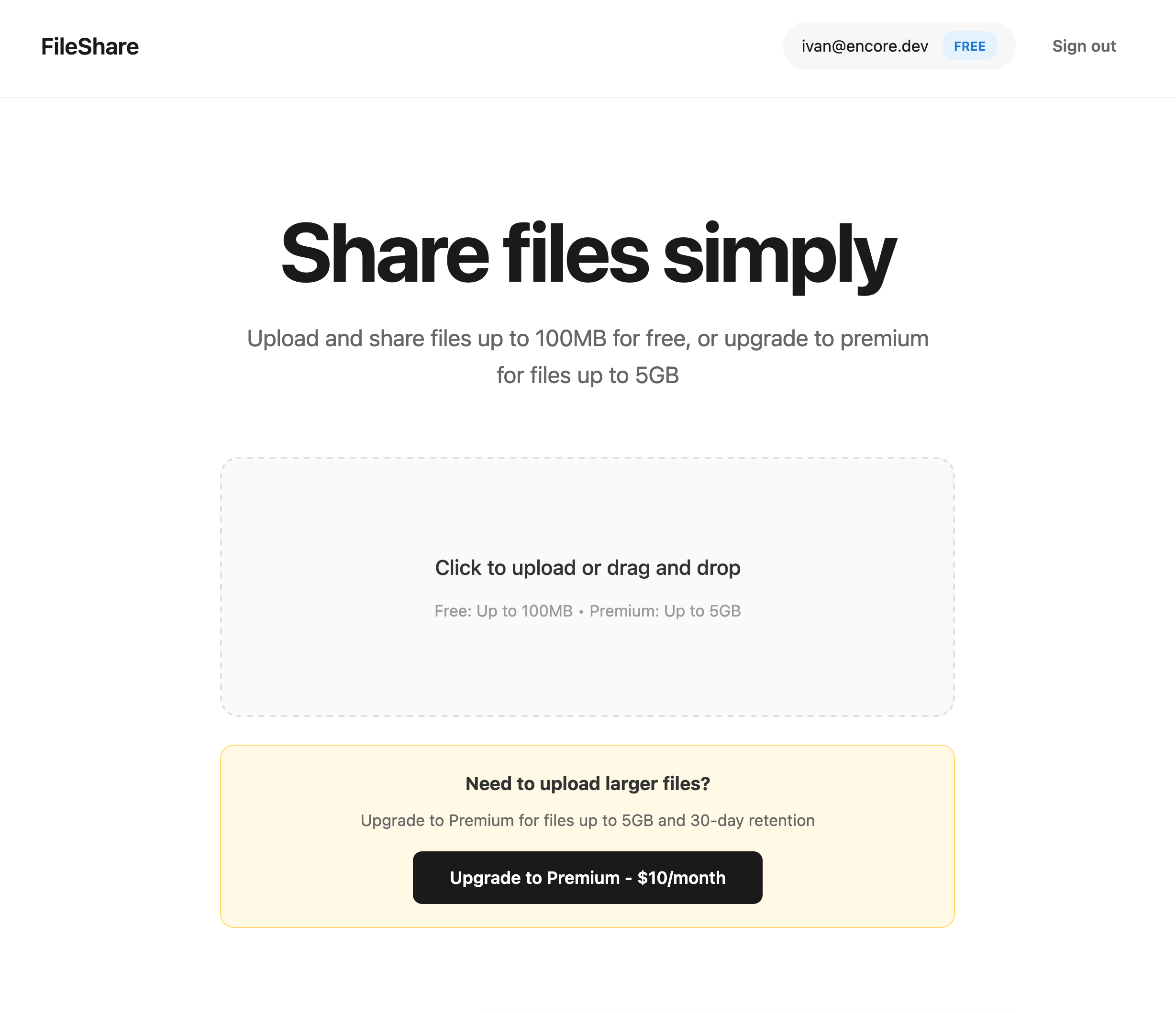

Building the frontend

Let's build a clean, WeTransfer-style frontend that showcases the freemium SaaS model. Users can immediately upload files without signing up, and authentication is only prompted when they need premium features.

Setting up the frontend service

Create a new service to serve static files:

```typescript
// frontend/encore.service.ts
import { Service } from "encore.dev/service";

export default new Service("frontend");
```

Create the static file handler:

```typescript
// frontend/frontend.ts
import { api } from "encore.dev/api";

// Serve everything in ./assets; the "!" marks this as a fallback route
export const serve = api.static({
  expose: true,
  path: "/!path",
  dir: "./assets",
});
```

The path: "/!path" pattern declares a fallback route. It matches any path that doesn't match one of your API endpoints, so serving static files at the root doesn't conflict with your API. This works well for single-page applications.

Creating the UI

Create a clean, single-screen landing page in frontend/assets/index.html with WeTransfer-style design. The complete frontend code is available in the example repository and includes:

- File upload with drag-and-drop support and progress tracking

- Modal-based authentication using the BetterAuth endpoints

- Tier detection that checks subscription status and shows appropriate limits

- Upgrade prompt with Polar checkout integration when users hit free tier limits

- Clean, minimal design with black accents and no scrolling

The frontend uses vanilla HTML, CSS, and JavaScript with no build step required.

Static files are served directly from Encore's Rust runtime with zero JavaScript execution, making them extremely fast. When you deploy with git push encore, your frontend deploys alongside your backend, giving you a single URL you can immediately share to demo your prototype.

Production frontend

For production applications with more complex needs (React, Next.js, build pipelines), we recommend deploying your frontend to Vercel, Netlify, or similar services and using the generated API client to call your Encore backend.

Generate the type-safe client:

encore gen client --lang=typescript --output=./frontend-client

This creates a fully typed API client matching your backend's TypeScript interfaces, giving you end-to-end type safety.

Testing the frontend

Restart your backend:

encore run

Open http://localhost:4000 in your browser and you'll see your file sharing UI! Try:

- Uploading a small file (under 100MB) - it works!

- Uploading a large file (over 100MB) - see the upgrade prompt

- Viewing your uploaded files with expiration countdown
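The expiration countdown in the file list can be derived from the expiresAt field that listFiles returns. It arrives as an ISO string in the JSON response. Here is a sketch assuming a coarse days/hours display. formatTimeRemaining is a hypothetical helper, not code taken from the example's index.html:

```typescript
const HOUR_MS = 60 * 60 * 1000;
const DAY_MS = 24 * HOUR_MS;

// Turn an expiry timestamp into a short label such as "6d 23h left"
export function formatTimeRemaining(expiresAt: Date | string, now: Date = new Date()): string {
  const remainingMs = new Date(expiresAt).getTime() - now.getTime();
  if (remainingMs <= 0) return "Expired";

  const days = Math.floor(remainingMs / DAY_MS);
  const hours = Math.floor((remainingMs % DAY_MS) / HOUR_MS);
  if (days > 0) return `${days}d ${hours}h left`;
  if (hours > 0) return `${hours}h left`;
  return "Less than 1h left";
}
```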

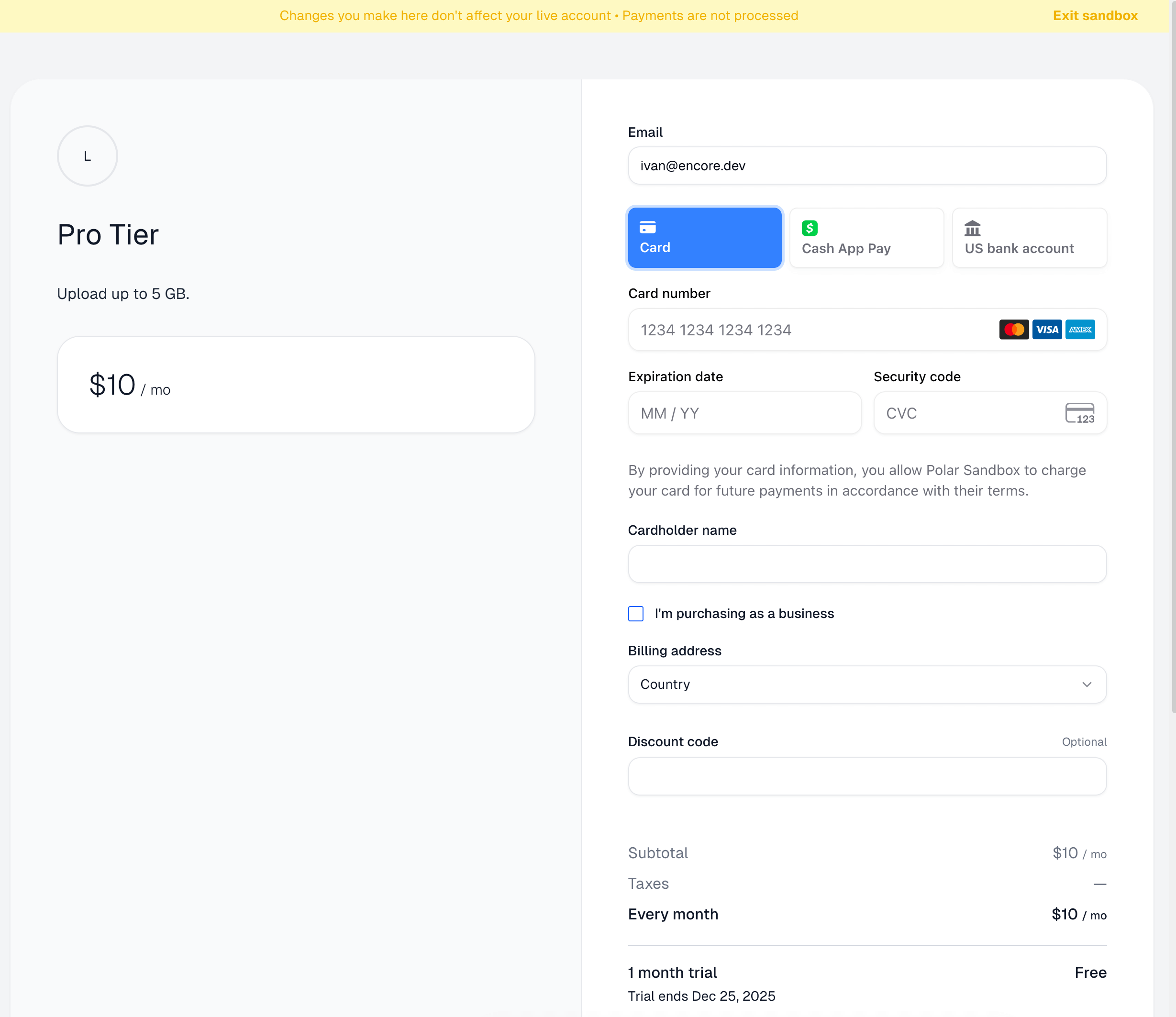

Testing the full checkout flow

To test the complete upgrade experience with Polar's sandbox:

1. Create a product in Polar sandbox:

Go to sandbox.polar.sh and create a "Premium" product:

- Navigate to Products → Create Product

- Name: "Premium File Sharing"

- Price: $9.99/month (or any amount)

- Copy the product ID (looks like 279910fa-6a8c-46d0-b196-84e98a891018)

2. Update your frontend to use your product ID:

Edit frontend/assets/index.html and replace the product ID in the upgradeToPremium function:

productId: '279910fa-6a8c-46d0-b196-84e98a891018', // Your actual product ID

3. Test the upgrade flow:

- Try to upload a file over 100MB as a free user

- See the upgrade banner appear

- Click "Upgrade for $9.99/month"

- You'll be redirected to Polar's sandbox checkout

- Use a test card like 4242 4242 4242 4242

- Complete the checkout (no real money charged!)

- Polar sends a webhook to your backend

- Try uploading the large file again - it should work!

This demonstrates the complete payment flow end-to-end using Polar's sandbox environment. Your users see a real checkout page, complete a real payment flow, and get upgraded to premium - all without processing actual payments.

Deployment

Deploy your file sharing service:

git add .

git commit -m "Add file sharing with Polar payments"

git push encore

Set your production secrets:

encore secret set --prod PolarAccessToken

encore secret set --prod BetterAuthSecret

Configure your Polar webhook URL in the Polar Dashboard:

https://your-production-domain.com/webhooks/polar

Important: Implement webhook signature verification to ensure requests are from Polar.

Note: Encore Cloud is great for prototyping and development with fair use limits. For production workloads, you can connect your AWS or GCP account and Encore will provision infrastructure directly in your cloud account (S3/Cloud Storage buckets, RDS/Cloud SQL databases, IAM roles). You can also self-host using Docker.

Advanced features

Sharing files with expiration links

Add shareable links with custom expiration dates:

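```typescript
import { api, APIError } from "encore.dev/api";
import { randomBytes } from "node:crypto";
import { db } from "./db";

// Requires a file_shares table, e.g.:
// CREATE TABLE file_shares (
//   share_token TEXT PRIMARY KEY,
//   file_id TEXT NOT NULL,
//   expires_at TIMESTAMPTZ NOT NULL
// );

interface CreateShareLinkRequest {
  fileId: string;
  expiresInDays: number;
}

interface CreateShareLinkResponse {
  shareUrl: string;
}

export const createShareLink = api(
  { expose: true, method: "POST", path: "/files/:fileId/share", auth: true },
  async (req: CreateShareLinkRequest): Promise<CreateShareLinkResponse> => {
    // Keep links within the longest retention period (30 days for premium)
    if (!Number.isInteger(req.expiresInDays) || req.expiresInDays < 1 || req.expiresInDays > 30) {
      throw APIError.invalidArgument("expiresInDays must be an integer between 1 and 30");
    }

    // Random, URL-safe token that is hard to guess
    const shareToken = randomBytes(24).toString("base64url");
    const expiresAt = new Date(Date.now() + req.expiresInDays * 24 * 60 * 60 * 1000);

    await db.exec`
      INSERT INTO file_shares (file_id, share_token, expires_at)
      VALUES (${req.fileId}, ${shareToken}, ${expiresAt})
    `;

    return { shareUrl: `https://yourapp.com/shared/${shareToken}` };
  }
);
```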

Before you deploy to production

Before going live, update these development defaults to production-ready configurations:

Security:

- Enable email verification: Change requireEmailVerification: false to true in auth/better-auth.ts

- Use Encore secrets: Replace the hardcoded authSecret with secret("BetterAuthSecret")()

- Verify webhook signatures: Implement Polar webhook signature verification in your webhook handler

- Production API keys: Run encore secret set --prod PolarAccessToken with your production Polar API key

Infrastructure:

- Add file cleanup: Create a cron job that deletes expired files from object storage to manage costs

- Configure CORS: Update encore.app with your production frontend domain if you use a separate frontend

- Rate limiting: Add upload rate limits to prevent abuse (use Encore middleware)
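For the rate-limiting item above, the core logic can be a small token bucket keyed by client, for example the customer ID or IP address. The sketch below keeps state in memory, so it assumes a single instance. Multiple instances would need a shared store, and the wiring into Encore middleware is not shown. The class name and parameters are illustrative:

```typescript
// Token bucket: each key holds up to `capacity` tokens, refilled at `refillPerSecond`
export class TokenBucketLimiter {
  private readonly capacity: number;
  private readonly refillPerSecond: number;
  private readonly buckets = new Map<string, { tokens: number; updatedAt: number }>();

  constructor(capacity: number, refillPerSecond: number) {
    this.capacity = capacity;
    this.refillPerSecond = refillPerSecond;
  }

  // Returns true (and consumes a token) if the request is allowed
  tryConsume(key: string, nowMs: number = Date.now()): boolean {
    const bucket = this.buckets.get(key) ?? { tokens: this.capacity, updatedAt: nowMs };

    // Refill based on elapsed time, never exceeding capacity
    const elapsedSeconds = Math.max(0, nowMs - bucket.updatedAt) / 1000;
    bucket.tokens = Math.min(this.capacity, bucket.tokens + elapsedSeconds * this.refillPerSecond);
    bucket.updatedAt = nowMs;

    const allowed = bucket.tokens >= 1;
    if (allowed) bucket.tokens -= 1;
    this.buckets.set(key, bucket);
    return allowed;
  }
}
```

For example, new TokenBucketLimiter(10, 10 / 60) allows bursts of 10 uploads and refills to roughly 10 per minute. Rejected requests would typically get a 429 response.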

Monitoring:

- Set up alerts: Configure alerts in Encore Cloud for failed uploads, webhook errors, and storage usage

- Log aggregation: Enable log streaming to your preferred logging service

- Error tracking: Integrate error monitoring (Sentry, Rollbar, etc.)

Example file cleanup cron job:

```typescript
// files/cleanup.ts
import { CronJob } from "encore.dev/cron";
import { api } from "encore.dev/api";
import { db } from "./db";
import { uploads } from "./bucket";

interface ExpiredFile {
  id: string;
  storage_key: string;
}

// Internal endpoint (not exposed) that the cron job calls
export const cleanupExpiredFiles = api({}, async (): Promise<{ deleted: number }> => {
  const rows = db.query<ExpiredFile>`
    SELECT id, storage_key
    FROM files
    WHERE expires_at < NOW()
  `;

  const expiredFiles: ExpiredFile[] = [];
  for await (const row of rows) {
    expiredFiles.push(row);
  }

  for (const file of expiredFiles) {
    // Delete the object from storage, then its metadata row by ID, so files that
    // expire while the job runs aren't dropped from the database without their object
    await uploads.remove(file.storage_key);
    await db.exec`DELETE FROM files WHERE id = ${file.id}`;
  }

  return { deleted: expiredFiles.length };
});

// Run daily at 2 AM (UTC) to clean up expired files
const _ = new CronJob("file-cleanup", {
  title: "Clean up expired files",
  schedule: "0 2 * * *",
  endpoint: cleanupExpiredFiles,
});
```

Next steps

- Implement usage-based billing for storage

- Set up file preview generation for images and PDFs

- Add cron jobs to clean up expired files

- Implement rate limiting for uploads and downloads

If you found this tutorial helpful, consider starring Encore on GitHub to help others discover it.



More advanced features

Automatic file cleanup

Add a cron job to delete expired files:

```typescript
// files/cleanup.ts
import { CronJob } from "encore.dev/cron";
import { api } from "encore.dev/api";
import { uploads } from "./bucket";
import { db } from "./db";
import log from "encore.dev/log";

// Cron jobs call an API endpoint, so define the cleanup as an internal endpoint
export const cleanupExpiredFiles = api({}, async (): Promise<void> => {
  // Get expired files
  const expiredFiles = db.query<{ id: string; storage_key: string }>`
    SELECT id, storage_key
    FROM files
    WHERE expires_at < NOW()
  `;

  let deletedCount = 0;
  for await (const file of expiredFiles) {
    await uploads.remove(file.storage_key); // Delete from bucket
    await db.exec`DELETE FROM files WHERE id = ${file.id}`; // Delete from database
    deletedCount++;
  }

  log.info("Cleaned up expired files", { count: deletedCount });
});

// Run cleanup daily at 2 AM (UTC)
const _ = new CronJob("cleanup-files", {
  title: "Clean up expired files",
  schedule: "0 2 * * *",
  endpoint: cleanupExpiredFiles,
});
```

Download tracking

Track download counts for analytics:

```typescript
// Add to files table migration:
// ALTER TABLE files ADD COLUMN download_count INT DEFAULT 0;

// In download.ts, increment the counter (fileId is the requested file's ID):
await db.exec`
  UPDATE files
  SET download_count = download_count + 1
  WHERE id = ${fileId}
`;
```

Usage-based billing

Charge based on total storage or bandwidth:

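```typescript
// Track total bytes stored per customer (only files that haven't expired)
const storageStats = await db.queryRow<{ total_bytes: string | number }>`
  SELECT COALESCE(SUM(size_bytes), 0) AS total_bytes
  FROM files
  WHERE uploaded_by = ${customerId}
    AND expires_at > NOW()
`;

// SUM over a BIGINT column yields NUMERIC, which may arrive as a string
const totalBytes = Number(storageStats?.total_bytes ?? 0);
const storageGb = Math.ceil(totalBytes / (1024 * 1024 * 1024)); // round up to whole GB

// Report usage to Polar. Polar's usage-based billing is built on ingested events
// and meters; this assumes the SDK's events.ingest method, so check your SDK version.
await polar.events.ingest({
  events: [
    {
      name: "storage_gb", // hypothetical event name; match it to your meter
      customerId, // Polar customer ID (uploaded_by holds it in this tutorial)
      metadata: { storage_gb: storageGb },
    },
  ],
});
```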

File sharing permissions

Add sharing links with passwords:

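```typescript
import { api } from "encore.dev/api";
import { randomBytes } from "node:crypto";
import { db } from "./db";

// Add to files table:
// ALTER TABLE files ADD COLUMN share_token TEXT UNIQUE;
// ALTER TABLE files ADD COLUMN share_password TEXT;

interface CreateProtectedShareLinkRequest {
  fileId: string;
  password?: string;
}

// Generate an optionally password-protected share link. Named differently from
// createShareLink so both endpoints can live in the files service.
export const createProtectedShareLink = api(
  { expose: true, auth: true, method: "POST", path: "/files/:fileId/protected-share" },
  async (req: CreateProtectedShareLinkRequest): Promise<{ shareUrl: string }> => {
    // Persist the token so a /shared/:token handler can find the file later
    const shareToken = randomBytes(24).toString("base64url");

    // In production, store a salted hash of the password rather than the raw value
    await db.exec`
      UPDATE files
      SET share_token = ${shareToken}, share_password = ${req.password ?? null}
      WHERE id = ${req.fileId}
    `;

    return { shareUrl: `https://yourapp.com/shared/${shareToken}` };
  }
);
```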
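Storing share passwords as plain text means a database leak exposes them. The sketch below hashes them with Node's built-in scrypt before storage and checks them in constant time when a link is opened. It assumes a "salt:hash" string is what goes into the share_password column. The helper names are illustrative:

```typescript
import { randomBytes, scryptSync, timingSafeEqual } from "node:crypto";

const KEY_LENGTH = 64;

// Hash a share password as "<salt hex>:<scrypt hash hex>"
export function hashSharePassword(password: string): string {
  const salt = randomBytes(16).toString("hex");
  const hash = scryptSync(password, salt, KEY_LENGTH).toString("hex");
  return `${salt}:${hash}`;
}

// Check a submitted password against a stored "<salt>:<hash>" value
export function verifySharePassword(password: string, stored: string): boolean {
  const [salt, hashHex] = stored.split(":");
  if (!salt || !hashHex) return false;
  const expected = Buffer.from(hashHex, "hex");
  if (expected.length !== KEY_LENGTH) return false;
  const actual = scryptSync(password, salt, KEY_LENGTH);
  // Constant-time comparison avoids leaking how many bytes matched
  return timingSafeEqual(actual, expected);
}
```

The share endpoint would store hashSharePassword(req.password). The handler behind the share URL would call verifySharePassword before serving the file.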
