Long Term Ownership and Maintenance of an Event-Driven System

The least sexy aspect of building Event-Driven Architectures

The least sexy aspect of any system is long-term ownership and the maintenance burden that comes with it. Event-driven systems are no exception: if you choose to go down this path, chances are you will build more and more microservices, and your system will grow in complexity.

In this, the final post in this series on building and owning an Event-Driven Architecture (EDA), we will delve into the key aspects of managing an event-driven system, focusing on updating your services and your infrastructure and exploring the challenges that come with both. At times we'll focus on Go specifically, but most of the content applies to any language you choose (and to any distributed system too).

We will explore the importance of keeping dependencies up to date, making major version updates, maintaining container images and some other areas of interest as we go. This post is by no means exhaustive; long-term ownership of software is complicated and could fill entire books (and it has). I have left some further reading recommendations at the end of this article.

OK, let's dive in!

Keeping your software up to date

Keeping dependencies up to date

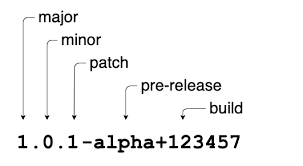

Keeping dependencies up to date is crucial for the long-term health and security of your system. Regularly updating them ensures that you benefit from upstream bug fixes, performance improvements and security patches. If a library you are using uses Go modules, it will likely be versioned with Semantic Versioning (semver), which helps both humans and machines figure out the impact of making a change. Semver uses the notation MAJOR.MINOR.PATCH (for example, v1.4.2).

Major versions typically contain breaking changes to the API or behavior, minor versions add functionality in a backward-compatible way, and patch versions contain backward-compatible bug fixes that are typically safe to take with minimal testing.
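To make the notation concrete, here is a minimal sketch that classifies an upgrade as major, minor or patch. It is deliberately simplified: it ignores pre-release and build suffixes, and `ParseVersion` and `UpgradeKind` are hypothetical helpers, not part of any library. For production code, the `golang.org/x/mod/semver` package offers functions such as `Compare` and `Major` for this job.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// Version is a parsed MAJOR.MINOR.PATCH semantic version (hypothetical helper type).
type Version struct {
	Major, Minor, Patch int
}

// ParseVersion parses strings such as "v1.4.2" or "1.4.2".
// Pre-release and build suffixes ("-rc.1", "+meta") are dropped in this sketch.
func ParseVersion(s string) (Version, error) {
	s = strings.TrimPrefix(s, "v")
	if i := strings.IndexAny(s, "-+"); i >= 0 {
		s = s[:i]
	}
	parts := strings.Split(s, ".")
	if len(parts) != 3 {
		return Version{}, fmt.Errorf("invalid semver %q", s)
	}
	var nums [3]int
	for i, p := range parts {
		n, err := strconv.Atoi(p)
		if err != nil || n < 0 {
			return Version{}, fmt.Errorf("invalid semver component %q", p)
		}
		nums[i] = n
	}
	return Version{nums[0], nums[1], nums[2]}, nil
}

// UpgradeKind reports the most significant component that differs.
func UpgradeKind(from, to Version) string {
	switch {
	case to.Major != from.Major:
		return "major" // expect breaking changes: read the release notes closely
	case to.Minor != from.Minor:
		return "minor" // new, supposedly backward-compatible features
	case to.Patch != from.Patch:
		return "patch" // bug fixes only, in theory
	default:
		return "none"
	}
}

func main() {
	pairs := [][2]string{{"v1.4.2", "v1.4.3"}, {"v1.4.2", "v1.5.0"}, {"v1.4.2", "v2.0.0"}}
	for _, p := range pairs {
		from, _ := ParseVersion(p[0])
		to, _ := ParseVersion(p[1])
		fmt.Printf("%s -> %s: %s\n", p[0], p[1], UpgradeKind(from, to))
	}
}
```

As the next paragraphs stress, the label only tells you what the maintainer intended, not what actually changed.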

Before you run go get -u, check the release notes.

Many libraries publish detailed release notes covering every change in a given version. Do not assume that a minor version update contains no breaking changes just because of its version number; I have been burnt multiple times by libraries misusing semver.

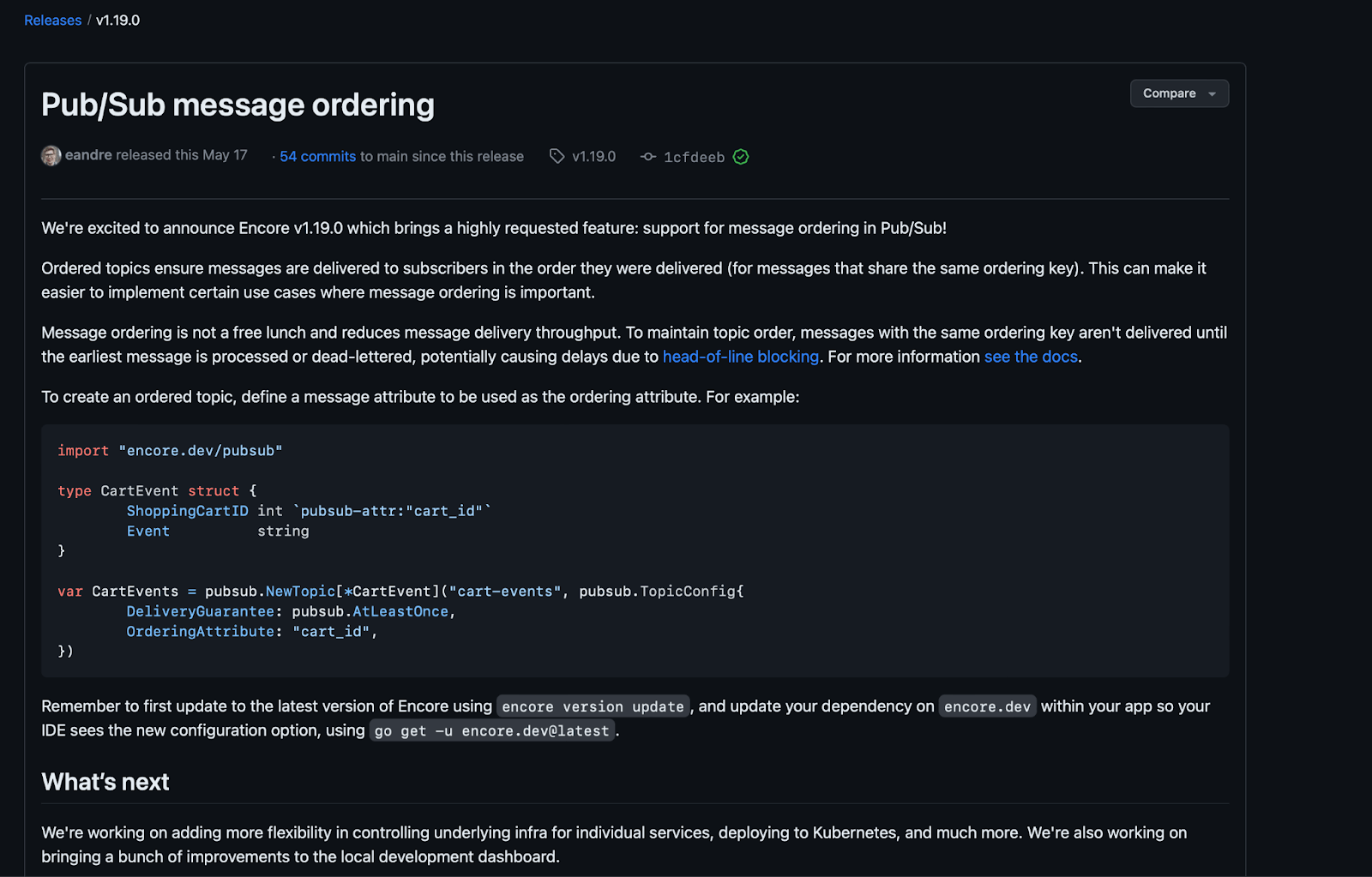

Under the "Releases" tab on GitHub, you can see a detailed summary of a library's latest release, which you can use to decide whether to update now or not.

If it sounds like an update you need, test it in isolation (even for patch versions) before you ship it to customers.

What about major version updates?

Major updates can be a big challenge, as they often make breaking changes to the library's API. If your code is brittle and tightly coupled to the library rather than hidden behind well-defined interfaces, this is where you may feel some pain as you attempt to upgrade. Rigorous testing is necessary here, and you should also evaluate your current implementation against the new features available; a "hack" you wrote previously because the library did not support a specific approach might now be one line!

This all sounds like a lot of work, and it is. Staying on top of your dependencies can take up a lot of time, which is one reason you will often hear Go developers encouraging people not to use a library unless they have to. Go has a very rich standard library, and many things are straightforward to build yourself.

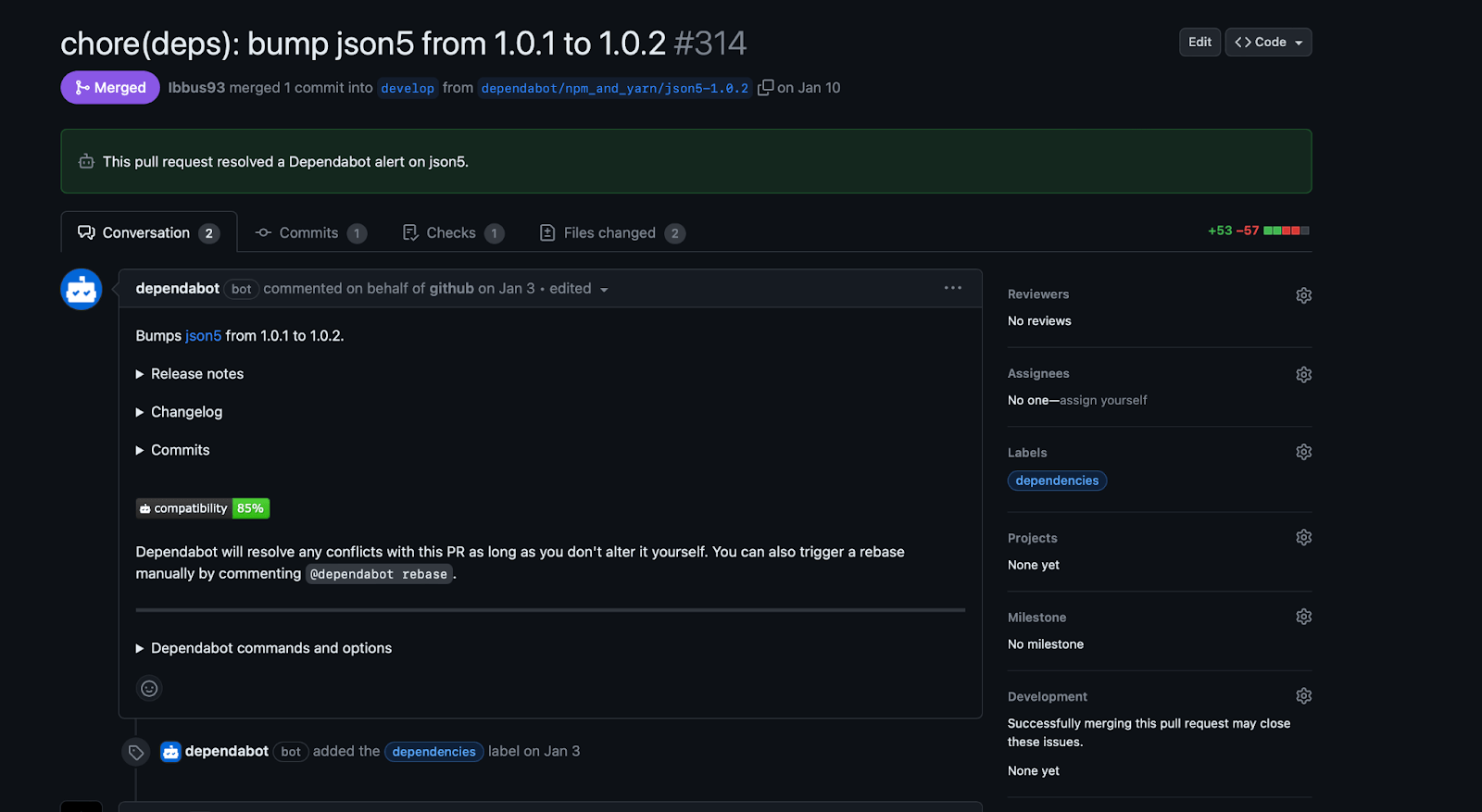

You can ease some of this burden with tooling. If you are using GitHub, Dependabot can be configured to open pull requests against your repo automatically whenever a dependency has an update. If you're not a GitHub user, you can use Renovate, which even supports self-hosting.

Keeping container images up to date

As well as the code itself, if you have opted to run your code in a containerised environment, you must keep your container images up to date too. If you opt for a serverless model like Encore's, you push this maintenance burden to the cloud provider, which is a major plus.

Keeping your containers up to date is arguably more important than keeping your code up to date. Many compliance standards, such as FedRAMP and PCI DSS, insist that you have a continuous container scanning process in place, including steps for remediation.

As with code dependencies, container updates can include security patches, bug fixes and improvements. However, they can also include breaking changes, so it is crucial to test them thoroughly before putting them into production. Wherever possible, I recommend using a distroless base image, which will drastically reduce your image size, your attack surface and therefore your maintenance burden going forward.

Again, there are options to automate some of the burden here by using tools such as Watchtower.

Handling Contract Changes

In every other article in this series we have mentioned schema changes and the challenges they can present, so I will not labor the point here. Adding new message types and managing the schema are among the maintenance tasks you need to think about when committing to the long-term ownership of an event-driven system. You may want to decide who is responsible for approving changes and what the SLAs are for that, as well as your company's strategy for deprecating specific fields or message types. If you opt to use Protobuf, deprecation is discussed as part of the spec here.

On-Call Support

Once you start building an event-driven system, you are signing up for a system with many more moving parts than a monolith. This brings additional complexity (you can read the second article in the series here to help you figure out whether this trade-off is worth it). As the number of services grows, it becomes difficult for a single engineer to hold the entire system in their head, or to figure it out quickly during an incident.

There is much written about how to build an effective on-call rota and the challenges that come with it. I particularly like this post from Atlassian, as well as this great blog post Monzo wrote about how they do it in practice. The takeaways most relevant to this article are:

- On-call is a maintenance burden and should be treated as such; don't expect an on-call engineer to be as effective as they would be if not on-call.

- Ensure you take the learnings from incidents and make time to improve your system and processes. This will make your event-driven system more resilient, as well as ensure that your engineers are happy and productive.

- Invest in on-call tooling. In the previous article here, Elliot discussed Jaeger, which helps with visibility. Alongside Prometheus, Grafana and PagerDuty, you have the beginnings of a great observability and alerting stack.

Keeping your infrastructure up to date

As well as keeping your software up to date, you need a plan to update your infrastructure. Even in managed environments, where much of the maintenance burden is handled for you, there are still decisions to be made to ensure you can upgrade your cluster with minimal to no impact. For example, a user asked in the AWS forums: "How big is the risk when upgrading (managed) kafka (between) versions?" The answer states that you can achieve zero downtime as long as you have a highly available Kafka cluster, which means:

- You run Kafka in three Availability Zones.

- The replication factor of your topics is at least three.

- CPU is under 60% utilization.

- You have tested the change in a staging environment to ensure that the cluster upgrade doesn't break the client libraries you are using.
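A checklist like this can be codified as a pre-flight gate in your upgrade tooling so nobody skips a step. The sketch below encodes the four criteria above; `ClusterState` and `PreflightProblems` are hypothetical, and in practice you would populate the struct from your cloud provider's API and your monitoring system, which is not shown here.

```go
package main

import (
	"fmt"
	"strings"
)

// ClusterState is a hypothetical snapshot gathered before an upgrade.
type ClusterState struct {
	AvailabilityZones    int
	MinReplicationFactor int     // lowest replication factor across all topics
	PeakCPUPercent       float64 // recent peak broker CPU utilization
	TestedInStaging      bool
}

// PreflightProblems returns every criterion the cluster fails;
// an empty result means the upgrade may proceed.
func PreflightProblems(s ClusterState) []string {
	var problems []string
	if s.AvailabilityZones < 3 {
		problems = append(problems, fmt.Sprintf("only %d availability zones (need 3)", s.AvailabilityZones))
	}
	if s.MinReplicationFactor < 3 {
		problems = append(problems, fmt.Sprintf("replication factor %d (need at least 3)", s.MinReplicationFactor))
	}
	if s.PeakCPUPercent >= 60 {
		problems = append(problems, fmt.Sprintf("CPU at %.0f%% (need under 60%%)", s.PeakCPUPercent))
	}
	if !s.TestedInStaging {
		problems = append(problems, "upgrade not yet tested in staging")
	}
	return problems
}

func main() {
	s := ClusterState{AvailabilityZones: 3, MinReplicationFactor: 2, PeakCPUPercent: 45, TestedInStaging: true}
	if p := PreflightProblems(s); len(p) > 0 {
		fmt.Println("blocked:", strings.Join(p, "; "))
		return
	}
	fmt.Println("ok to upgrade")
}
```

Returning every failing criterion, rather than stopping at the first, means one run tells you everything that needs fixing before the upgrade window.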

This activity is certainly not free, even if all you have to do is the final step. If you chose to run your own event router after reading Michael's article, the upgrade process gets even more expensive, as you'll need to come up with your own upgrade plan, including a rollback plan in case something unexpected happens.
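For a self-hosted event router, the core of such a plan is often a rolling upgrade: upgrade one node at a time, check its health, and roll back everything touched so far if anything goes wrong. The sketch below shows that control flow only. The `Broker` interface, `fakeBroker` and the version strings are hypothetical, and a real plan would also verify health after rollback and wait for replicas to catch up between nodes.

```go
package main

import (
	"errors"
	"fmt"
)

// Broker is a hypothetical handle on one node of a self-hosted event router.
type Broker interface {
	Name() string
	Upgrade(version string) error // installs and restarts on the given version
	Healthy() bool
}

// RollingUpgrade upgrades brokers one at a time so the cluster keeps serving
// traffic. If a broker fails to upgrade or is unhealthy afterwards, every
// broker touched so far (including the failing one) is returned to
// fromVersion, newest first, and an error is returned.
func RollingUpgrade(brokers []Broker, fromVersion, toVersion string) error {
	for i, b := range brokers {
		err := b.Upgrade(toVersion)
		if err == nil && !b.Healthy() {
			err = errors.New("unhealthy after upgrade")
		}
		if err == nil {
			continue
		}
		rollbackFailures := 0
		for j := i; j >= 0; j-- {
			if rbErr := brokers[j].Upgrade(fromVersion); rbErr != nil {
				rollbackFailures++
			}
		}
		return fmt.Errorf("upgrading %s: %w (%d rollback failures)", b.Name(), err, rollbackFailures)
	}
	return nil
}

// fakeBroker simulates a node that reports unhealthy when running badVersion.
type fakeBroker struct {
	name, version, badVersion string
}

func (f *fakeBroker) Name() string             { return f.name }
func (f *fakeBroker) Upgrade(v string) error   { f.version = v; return nil }
func (f *fakeBroker) Healthy() bool            { return f.version != f.badVersion }

func main() {
	a := &fakeBroker{"broker-a", "3.5", ""}
	b := &fakeBroker{"broker-b", "3.5", "3.6"} // this node misbehaves on 3.6
	c := &fakeBroker{"broker-c", "3.5", ""}
	err := RollingUpgrade([]Broker{a, b, c}, "3.5", "3.6")
	fmt.Println(err)
	fmt.Println(a.version, b.version, c.version) // 3.5 3.5 3.5
}
```

Upgrading node by node keeps the blast radius to one broker at a time, which is only safe if the replication and capacity criteria above already hold.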

Another challenge you will face when running upgrades is a human one: coordination. Ideally, you want to run upgrades when the risk of customer impact is as low as possible. For companies with a global customer base, there is rarely, if ever, such a window. You may also need support from various development or DevOps teams (depending on how your company is structured), who will have their own priorities and may be located in different time zones.

There is no silver bullet here; the trick is to communicate, communicate and communicate. If you think you are annoying people because you're sending too many emails or too many messages, that is probably the right amount.

The above does not just apply to the event router; the same upgrade philosophy needs to be applied to databases and, if you're using it, Kubernetes.

As a general rule, you want to get into the habit of running these upgrades at least once every few months. It seems counter-intuitive, but the more regularly you upgrade, the more risk you remove from the process, as you become more skilled at doing it.

Infrastructure as Code

One strategy to make managing your infrastructure and upgrades as simple as possible is to codify your infrastructure, an approach known as Infrastructure as Code (IaC). An upgrade can then be as simple as opening a pull request, and a rollback as simple as reverting it.

By using an IaC tool such as Terraform, you describe the desired end state of your infrastructure, and the tool takes care of making that happen. This reduces the likelihood of human error and means infrastructure changes can be reviewed like any other piece of code, increasing collaboration.

The biggest downside to this approach is the learning curve that comes with it. Most of these tools have their own language you must use (Terraform uses HCL), and for each piece of infrastructure you want to integrate with, you must hope there is a plugin wrapping its API (called a provider in Terraform) that can be used to provision it. If there is not, you will have to write your own.

Furthermore, as the complexity of your infrastructure increases, managing it solely with an IaC tool can become challenging. These tools focus primarily on provisioning and managing infrastructure resources and typically do not support application-level orchestration or complex deployment scenarios, so you will likely end up layering another tool on top to achieve this.

Wrapping Up

Maintaining any software system is hard. On top of all of the above, you still have to find time for your team to ship new features, fix bugs and deal with customer issues. As a general rule, you should expect to spend 20-30% of your time on software maintenance and plan accordingly. If you are not, chances are you are accruing the dreaded technical debt, which will come with a much larger cost later.

This wraps up our four-part series on Event-Driven Architecture. We hope you have enjoyed it and found it useful. Please let us know what you think!

If you missed the previous parts, check them out:

- Part one: What is an EDA and Why do I need One?

- Part two: Making a Business Case for an EDA

- Part three: Building for Failure

If you have questions or feedback, please reach out on Discord, via email, or @encoredotdev on Twitter.

About The Author

Matthew Boyle is an experienced technical leader in the field of distributed systems, specializing in using Go.

He has worked at large companies such as Cloudflare and General Electric, as well as exciting high-growth startups such as Curve and Crowdcube.

Matt has been writing Go for production since 2018 and often shares blog posts and fun trivia about Go over on Twitter (@MattJamesBoyle).

If you enjoyed this blog post, you should check out Matt's book, Domain-Driven Design Using Go, which is available from Amazon here.
