Encore's MCP Server lets AI tools introspect your application

A local server that gives LLMs full access to your app's APIs, infra, and traces

Large Language Models (LLMs) are becoming more powerful, but without context, they're limited in how much they can do. The Model Context Protocol (MCP) is a new open standard for how applications provide context to LLMs.

Starting in Encore v1.47.0, an MCP server is available for all Encore applications. You run it locally with encore mcp start, which makes your app accessible to any MCP-compatible tool, such as Claude Desktop, IDEs like Cursor, or other AI-powered assistants.

The MCP Server enables AI tools to deeply introspect your application to understand its architecture, APIs, infrastructure, and runtime data in a structured way.

This means AI tools can more accurately generate code, validate changes, and help with other development tasks like debugging.

Example

With the MCP Server, you can now ask Cursor's agent mode to perform advanced actions, such as:

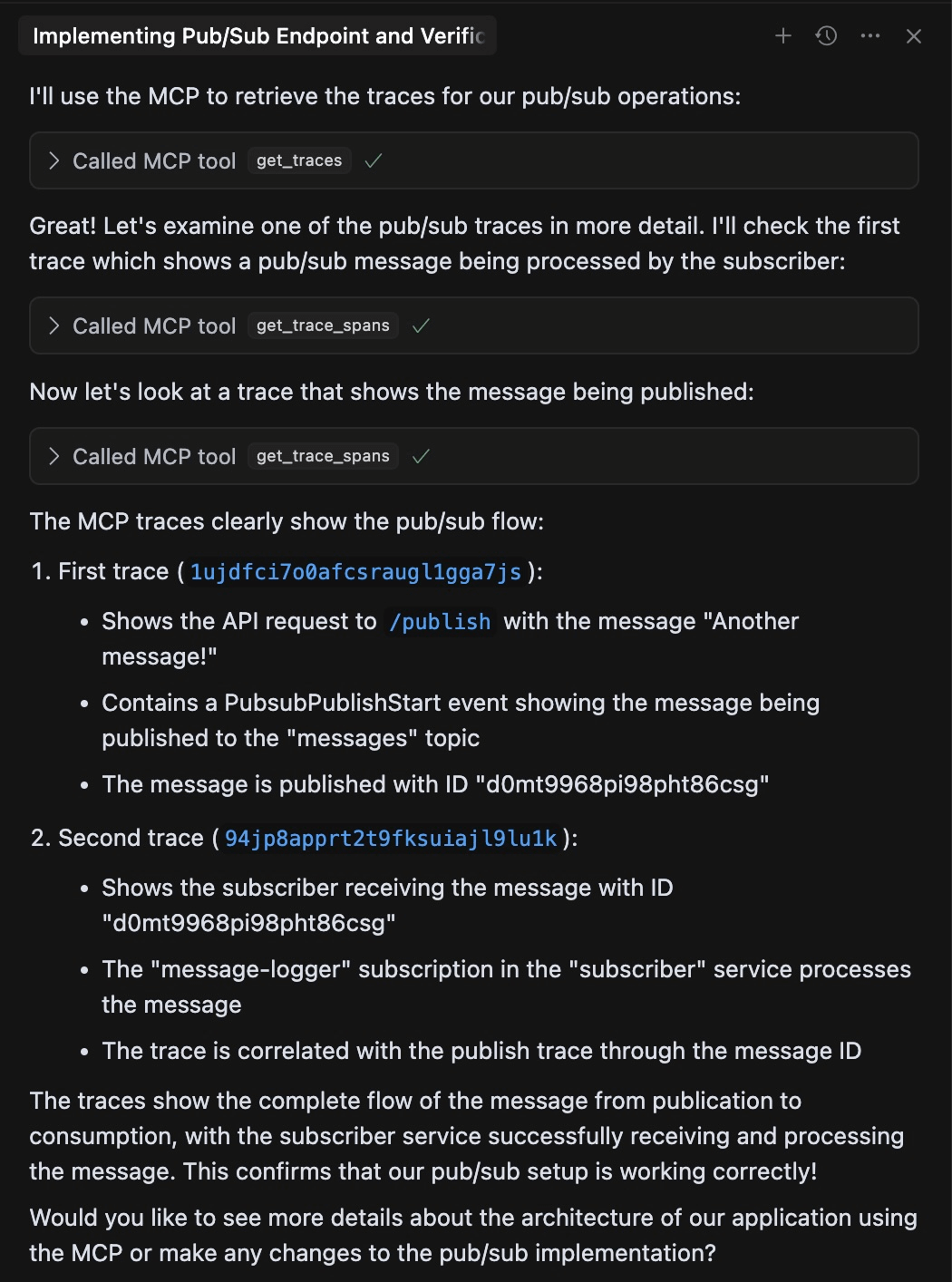

"Add an endpoint that publishes to a pub/sub topic, call it and verify that the publish is in the traces"

Cursor's agent will implement the feature, look up any unfamiliar conventions in Encore's documentation, start the local application to call the API, and use the MCP Server to fetch and analyze traces.
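The last step of that prompt, finding the publish in the traces, amounts to scanning a trace's spans for a publish to the right topic. The TypeScript below is a minimal sketch of that check. Several things in it are assumed for illustration: the `Span` shape, its `kind`/`topic`/`status` fields, and the `signups` topic are all hypothetical. The data the `get_trace_spans` tool actually returns is richer and is likely structured differently.

```typescript
// Hypothetical, simplified span shape used for illustration only; the real
// output of the get_trace_spans tool may use different fields and structure.
interface Span {
  id: string;
  parentId: string | null;
  kind: "request" | "db_query" | "pubsub_publish";
  name: string;
  topic?: string; // only set on pubsub_publish spans
  status: "ok" | "error";
}

// True if any span in the trace is a successful publish to `topic`.
function hasSuccessfulPublish(spans: Span[], topic: string): boolean {
  return spans.some(
    (s) => s.kind === "pubsub_publish" && s.topic === topic && s.status === "ok",
  );
}

// Example trace for a call to a hypothetical POST /signup endpoint.
const trace: Span[] = [
  { id: "1", parentId: null, kind: "request", name: "POST /signup", status: "ok" },
  { id: "2", parentId: "1", kind: "db_query", name: "INSERT INTO users", status: "ok" },
  { id: "3", parentId: "1", kind: "pubsub_publish", name: "publish", topic: "signups", status: "ok" },
];

console.log(hasSuccessfulPublish(trace, "signups")); // true
console.log(hasSuccessfulPublish(trace, "orders")); // false
```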

Want to try it?

It's all open source, so you can try it yourself by installing Encore.

Other uses

There are many ways you can leverage the MCP server to improve your workflow when using AI development tools:

1. Higher-quality agentic code generation

With structured access to your app's APIs, services, infrastructure, and traces, AI can generate code that’s better aligned with your architecture and implementation patterns.

2. Context-aware design suggestions

Need to add a new service or refactor an existing one? With visibility into your app’s architecture and infrastructure, AI can propose changes that respect system boundaries and dependencies.

3. Faster debugging with trace and infra context

When debugging, AI can use local trace data and database schemas to help you pinpoint issues and suggest relevant fixes, all based on the actual behavior of your local app. It can also call endpoints directly and help you understand the results by interpreting the corresponding traces.
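Here is a rough illustration of how trace data can narrow down a failure. Errors tend to propagate from a child span up to the request, so the deepest failing span is a reasonable first place to look. This TypeScript sketch relies on a hypothetical, simplified `Span` shape and an invented example trace. It is not the format Encore's trace tools return.

```typescript
// Hypothetical, simplified span shape; not the format Encore's trace tools return.
interface Span {
  id: string;
  parentId: string | null;
  name: string;
  error?: string; // set only when the span failed
}

// Number of ancestors a span has within the trace.
function depth(span: Span, byId: Map<string, Span>): number {
  let d = 0;
  let parentId = span.parentId;
  while (parentId !== null) {
    const parent = byId.get(parentId);
    if (parent === undefined) break; // parent is missing from this trace
    d++;
    parentId = parent.parentId;
  }
  return d;
}

// Errors usually bubble up from child spans to the request, so the deepest
// failing span is a reasonable first guess at the root cause.
function likelyRootCause(spans: Span[]): Span | undefined {
  const byId = new Map<string, Span>();
  for (const s of spans) byId.set(s.id, s);

  let best: Span | undefined;
  let bestDepth = -1;
  for (const s of spans) {
    if (s.error === undefined) continue;
    const d = depth(s, byId);
    if (d > bestDepth) {
      best = s;
      bestDepth = d;
    }
  }
  return best;
}

const spans: Span[] = [
  { id: "a", parentId: null, name: "POST /checkout", error: "internal error" },
  { id: "b", parentId: "a", name: "payments.Charge", error: "internal error" },
  { id: "c", parentId: "b", name: "SELECT balance FROM accounts", error: 'relation "accounts" does not exist' },
  { id: "d", parentId: "a", name: "inventory.Reserve" },
];

console.log(likelyRootCause(spans)?.name); // SELECT balance FROM accounts
```

A real assistant would combine a heuristic like this with other context, such as the schema information from `get_databases`, to explain why the query failed.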

How it works

Encore understands your application through static analysis. This is a fancy term for parsing and analyzing the code you write to build a graph of how your application works. This graph closely mirrors your own mental model of the system: boxes and arrows representing services that communicate with each other, pass data, and connect to infrastructure. We call it the Encore Application Model.

Encore's MCP server exposes "tools" built on top of this model, which let LLMs understand your application's structure, retrieve relevant information, and take actions within your system.
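To make the "boxes and arrows" idea concrete, here is a minimal TypeScript sketch of such a graph. It also shows how a tool could answer a structural question from that graph. The `AppModel`, `Service`, and `Endpoint` types and the `serviceEdges` function are hypothetical and heavily simplified. Encore's real model is far richer.

```typescript
// Hypothetical, heavily simplified application model for illustration only.
interface Endpoint {
  name: string;
  calls: string[]; // endpoints this one calls, as "service.endpoint"
}

interface Service {
  name: string;
  endpoints: Endpoint[];
  publishes: string[]; // pub/sub topics this service publishes to
}

interface AppModel {
  services: Service[];
  subscriptions: { topic: string; service: string }[];
}

// The "arrows": for each service, the other services it sends requests
// to (via endpoint calls) or messages to (via pub/sub topics).
function serviceEdges(app: AppModel): Map<string, Set<string>> {
  const edges = new Map<string, Set<string>>();
  for (const svc of app.services) {
    const out = new Set<string>();
    for (const ep of svc.endpoints) {
      for (const target of ep.calls) {
        const dot = target.indexOf(".");
        const targetService = dot === -1 ? target : target.slice(0, dot);
        if (targetService !== svc.name) out.add(targetService);
      }
    }
    for (const topic of svc.publishes) {
      for (const sub of app.subscriptions) {
        if (sub.topic === topic && sub.service !== svc.name) out.add(sub.service);
      }
    }
    edges.set(svc.name, out);
  }
  return edges;
}

const app: AppModel = {
  services: [
    { name: "user", endpoints: [{ name: "signup", calls: ["email.send"] }], publishes: ["signups"] },
    { name: "email", endpoints: [{ name: "send", calls: [] }], publishes: [] },
    { name: "analytics", endpoints: [], publishes: [] },
  ],
  subscriptions: [{ topic: "signups", service: "analytics" }],
};

const edges = serviceEdges(app);
console.log(Array.from(edges.get("user") ?? []).join(", ")); // email, analytics
```

Because this structure comes from static analysis rather than from reading raw files, answering questions like "what does the user service talk to?" becomes a direct lookup instead of guesswork.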

In this first release, the following tools are exposed via the MCP Server:

Database Tools

- get_databases: Retrieve metadata about all SQL databases defined in the application, including their schemas, tables, and relationships.

- query_database: Execute SQL queries against one or more databases in the application.

API Tools

- call_endpoint: Make HTTP requests to any API endpoint in the application.

- get_services: Retrieve comprehensive information about all services and their endpoints in the application.

- get_middleware: Retrieve detailed information about all middleware components in the application.

- get_auth_handlers: Retrieve information about all authentication handlers in the application.

Trace Tools

- get_traces: Retrieve a list of request traces from the application, including their timing, status, and associated metadata.

- get_trace_spans: Retrieve detailed information about one or more traces, including all spans, timing information, and associated metadata.

Source Code Tools

- get_metadata: Retrieve the complete application metadata, including service definitions, database schemas, API endpoints, and other infrastructure components.

- get_src_files: Retrieve the contents of one or more source files from the application.

PubSub Tools

- get_pubsub: Retrieve detailed information about all PubSub topics and their subscriptions in the application.

Storage Tools

- get_storage_buckets: Retrieve comprehensive information about all storage buckets in the application.

- get_objects: List and retrieve metadata about objects stored in one or more storage buckets.

Cache Tools

- get_cache_keyspaces: Retrieve comprehensive information about all cache keyspaces in the application.

Metrics Tools

- get_metrics: Retrieve comprehensive information about all metrics defined in the application.

Cron Tools

- get_cronjobs: Retrieve detailed information about all scheduled cron jobs in the application.

Secret Tools

- get_secrets: Retrieve metadata about all secrets used in the application.

Documentation Tools

- search_docs: Search the Encore documentation using Algolia's search engine.

- get_docs: Retrieve the full content of specific documentation pages.
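MCP clients invoke these tools using JSON-RPC 2.0 messages. A client sends `tools/list` to discover the available tools and their input schemas. It sends `tools/call`, with a tool `name` and `arguments`, to run one. The sketch below builds such messages in TypeScript and frames them for the stdio transport, where each message is a single line of JSON. The argument names passed to `query_database` are hypothetical, so check the schema the server reports via `tools/list`. A real client must also complete MCP's `initialize` handshake before calling tools.

```typescript
// Minimal JSON-RPC 2.0 request shape, as used by MCP.
interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params?: Record<string, unknown>;
}

let nextId = 1;

// Build a request with a fresh id; params are omitted when not given.
function request(method: string, params?: Record<string, unknown>): JsonRpcRequest {
  const req: JsonRpcRequest = { jsonrpc: "2.0", id: nextId++, method };
  if (params !== undefined) req.params = params;
  return req;
}

// stdio transport: one JSON message per line, with no embedded newlines.
function frame(msg: JsonRpcRequest): string {
  return JSON.stringify(msg) + "\n";
}

// Ask the server which tools it offers (with their input schemas).
const listReq = request("tools/list");

// Invoke one tool by name. These argument names are hypothetical; use the
// input schema the server reports for query_database in tools/list.
const queryReq = request("tools/call", {
  name: "query_database",
  arguments: { database: "users", query: "SELECT count(*) FROM users" },
});

// What a client would write to the server process's stdin, line by line.
const wire = frame(listReq) + frame(queryReq);
console.log(wire);
```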

Example: Integrating with Cursor

Cursor is one of the most popular AI-powered IDEs, and it's simple to use Encore's MCP server with it.

Just create the file .cursor/mcp.json with the following settings, replacing your-app-id with your Encore app's ID:

{
  "mcpServers": {
    "encore-mcp": {
      "command": "encore",
      "args": ["mcp", "run", "--app=your-app-id"]
    }
  }
}
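Each entry under `mcpServers` describes a process that the client launches and then talks to over stdin/stdout. As a small illustration, this TypeScript sketch parses the configuration above and resolves the command line it describes. The `McpConfig` type and `commandLine` helper are hypothetical and are not part of Cursor or Encore.

```typescript
// Hypothetical type mirroring the shape of the .cursor/mcp.json shown above.
interface McpConfig {
  mcpServers: Record<string, { command: string; args?: string[] }>;
}

// Resolve the command line a client would launch for a named server.
function commandLine(config: McpConfig, server: string): string {
  const entry = config.mcpServers[server];
  if (entry === undefined) throw new Error(`no MCP server named "${server}"`);
  return [entry.command, ...(entry.args ?? [])].join(" ");
}

const raw = `{
  "mcpServers": {
    "encore-mcp": {
      "command": "encore",
      "args": ["mcp", "run", "--app=your-app-id"]
    }
  }
}`;

const config = JSON.parse(raw) as McpConfig;
console.log(commandLine(config, "encore-mcp")); // encore mcp run --app=your-app-id
```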

Learn more in Cursor's MCP docs

It's early days

This is just an initial release, and we think we're only scratching the surface of what's possible when AI tools can deeply and accurately understand your applications.

We think there's a lot more to do in this space, but we're now confident that building applications on top of a declarative framework, like Encore, is a foundational and powerful enabler as AI tooling matures.

Watch this space for more.
