Keeping secrets from your AI agent

Your backend architecture determines what AI tools see, send, and write.

AI coding tools need to read your codebase to be useful, and they'll read as much of it as they can. In most backend projects, that includes Terraform configs with AWS account IDs, CI/CD pipelines with deployment credentials, Docker Compose files mapping out internal service topology, and sometimes .env files that weren't meant to be read at all.

Most teams handle .env with a secrets manager or cloud vault and keep production credentials off developer machines entirely. But not every project has that setup, and even local dev environments often end up with API keys and database passwords on disk. The infrastructure config is harder to deal with. It's committed to the repo because it needs to be, and there's no .gitignore fix for files that belong there.

.gitignore can protect uncommitted files like .env, but it's not bulletproof. Some tools don't respect it, and there've been cases where developers had to rotate entire credential sets after discovering their .env was indexed anyway (here's one example).

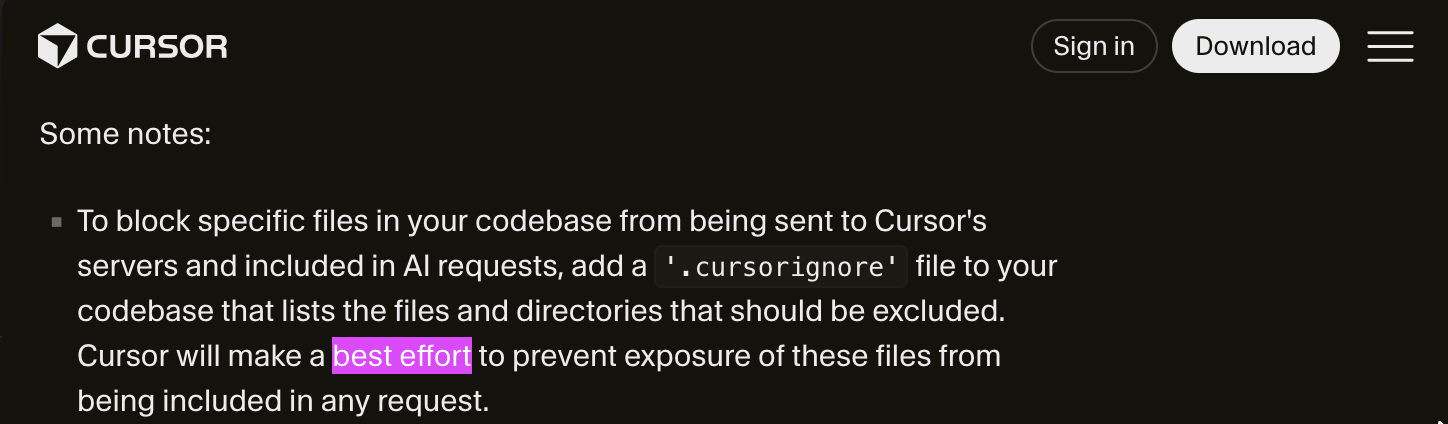

The tools themselves offer exclusion mechanisms, but in practice these are application-layer filters, not access controls:

- Cursor has .cursorignore, but their own security page describes it as "best effort."
- Copilot has content exclusion policies, but they don't apply at all in Agent mode, Edit mode, or Copilot CLI.
- Claude Code has .claudeignore and deny rules, but they only block specific tools. A cat .env through bash reads the file anyway.

There have been multiple CVEs across these tools for bypasses through symlinks and case sensitivity.

These mechanisms are better than nothing, but they're not a security boundary.
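To make the symlink and case-sensitivity point concrete, here is a minimal sketch of a path-based filter. It is not how any of the tools above are implemented; `deniedNames`, `isDeniedNaive`, and `isDeniedStricter` are hypothetical names, and the resolved path is passed in by the caller so the example stays free of filesystem calls.

```typescript
// Hypothetical deny list, in the spirit of an ignore file.
const deniedNames = new Set<string>([".env"]);

// Returns the last segment of a POSIX-style path.
function baseName(path: string): string {
  return path.slice(path.lastIndexOf("/") + 1);
}

// Naive filter: looks only at the path string that was requested.
// It never asks what that path resolves to on disk.
function isDeniedNaive(requestedPath: string): boolean {
  return deniedNames.has(baseName(requestedPath));
}

// Stricter filter: compares names case-insensitively and also checks
// the resolved target (what the filesystem reports after following symlinks).
function isDeniedStricter(requestedPath: string, resolvedPath: string): boolean {
  const names = [baseName(requestedPath), baseName(resolvedPath)];
  return names.some((name) => deniedNames.has(name.toLowerCase()));
}

// ".ENV" opens the same file as ".env" on a case-insensitive filesystem,
// yet the naive check lets it through.
const caseBypass = isDeniedNaive("config/.ENV"); // false

// Assume "notes/settings.txt" is a symlink to "/repo/.env".
const symlinkBypass = isDeniedNaive("notes/settings.txt"); // false
const symlinkCaught = isDeniedStricter("notes/settings.txt", "/repo/.env"); // true
```

Even the stricter version only guards the code paths that call it. A shell command that reads the file never passes through the filter, which is why these checks are filters rather than access controls.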

Even when all of this works correctly, it only covers uncommitted files. Terraform configs, CI/CD pipelines, and Docker Compose definitions are committed on purpose because that's how infrastructure-as-code works, and there's no mechanism to keep them out of the AI's context. The only way to change what the AI sees is to change what's in the repository.
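One way to see what committed files would expose is to scan their text for secret-shaped strings. The sketch below is illustrative rather than a complete scanner: `scanText` and the three patterns are assumptions chosen for this example, and the sample values are placeholders, not real credentials.

```typescript
type Finding = { kind: string; line: number };

// Illustrative patterns only; a real audit would need many more.
const patterns: { kind: string; regex: RegExp }[] = [
  // AWS access key IDs start with AKIA or ASIA followed by 16 characters.
  { kind: "aws-access-key-id", regex: /\b(?:AKIA|ASIA)[0-9A-Z]{16}\b/ },
  // ARNs embed the 12-digit account ID in their fifth field.
  { kind: "aws-account-id-in-arn", regex: /arn:aws:[a-z0-9-]*:[a-z0-9-]*:\d{12}:/ },
  // URLs of the form scheme://user:password@host.
  { kind: "url-with-password", regex: /[a-z][a-z0-9+.-]*:\/\/[^\s:@\/]+:[^\s@\/]+@/i },
];

// Returns one finding per (line, pattern) match, with 1-based line numbers.
function scanText(text: string): Finding[] {
  const findings: Finding[] = [];
  text.split("\n").forEach((lineText, index) => {
    for (const { kind, regex } of patterns) {
      if (regex.test(lineText)) {
        findings.push({ kind, line: index + 1 });
      }
    }
  });
  return findings;
}

// Placeholder content resembling what a Terraform file or .env might hold.
const sample = [
  'role_arn = "arn:aws:iam::123456789012:role/deploy"',
  "DATABASE_URL=postgres://app:hunter2@db.internal:5432/payments",
  "AWS_ACCESS_KEY_ID=AKIAIOSFODNN7EXAMPLE",
  "region = us-east-1",
].join("\n");

const findings = scanText(sample);
```

Running this over the sample flags the first three lines and leaves the region setting alone. A scan like this only reports what is there; removing it still means changing what the repository contains.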

Architecture determines exposure

The difference comes down to where infrastructure state is managed. In a traditional setup, it's managed through config files in the repository. With an infrastructure-from-code approach like Encore, you declare what your application needs as typed code in your application, and the platform provisions the actual infrastructure based on those declarations. Connection strings, instance configurations, and cloud credentials are resolved at runtime and never appear in the repository.

With Encore, the LLM sees a typed declaration that a service uses a Postgres database called payments. That's enough context to generate queries, write migrations, and build endpoints, without ever seeing the password, connection string, or AWS configuration. The same applies to pub/sub topics, caches, cron jobs, and object storage, which are all typed declarations that give the AI full context on what the system does without exposing where or how it runs.
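The separation can be sketched without any particular SDK. The code below is a stand-in, not Encore's API: `ResourceDeclaration`, `declareDatabase`, `Resolver`, and `connect` are hypothetical names used only to show which half lives in the repository and which half is supplied at runtime.

```typescript
// Hypothetical stand-in types for illustration; this is not Encore's SDK.
type ResourceKind = "sql-database" | "pubsub-topic" | "bucket";

interface ResourceDeclaration {
  kind: ResourceKind;
  name: string;
}

// What is committed: a kind and a logical name, nothing else.
function declareDatabase(name: string): ResourceDeclaration {
  return { kind: "sql-database", name };
}

const paymentsDb = declareDatabase("payments");

// What stays outside the repository: a runtime-provided lookup from a
// declaration to real connection details.
type Resolver = (decl: ResourceDeclaration) => string;

// Connection details are resolved only when the application runs.
function connect(decl: ResourceDeclaration, resolve: Resolver) {
  return { name: decl.name, connectionString: resolve(decl) };
}

// Application code refers to the database by its declaration alone.
function describeDependency(decl: ResourceDeclaration): string {
  return `service depends on ${decl.kind} "${decl.name}"`;
}

// In a real deployment the platform would supply the resolver; this fake
// one exists only so the sketch can be exercised locally.
const fakeResolver: Resolver = (decl) => `resolved-at-runtime://${decl.name}`;
const connection = connect(paymentsDb, fakeResolver);
```

Everything an AI tool could index here is `paymentsDb` and the code that uses it. The resolver's real implementation, and whatever credentials it returns, never need to be in the source tree.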

Where the data ends up

What's in the repository also determines what sensitive data leaves your machine. When the AI indexes your project, those files get sent to whatever model provider the tool is configured to use. Providers have data processing agreements and security certifications, but the files still end up in a third-party system.

For teams operating in regulated industries, this is becoming a real concern. GDPR, HIPAA, LGPD, and SOC 2 all require organizations to track and disclose third-party data processing, and if credentials in the repo can access systems with personal data or health records, those credentials are now in a model provider's infrastructure.

You don't need to be building under HIPAA for this to matter. If your repo has AWS credentials, database passwords, or deployment keys, those are sitting in a model provider's system the moment you open the project. An architecture that keeps infrastructure configuration out of the repository removes that exposure, and for teams that do operate in regulated industries, it also removes a compliance problem that would otherwise require disclosure and documentation.

What this means going forward

AI tools also pick up patterns from the code they index. If your codebase is full of process.env.DATABASE_URL and manual connection string handling, generated code follows the same approach, which means more code touching credentials, more surface area for leaks, and more secrets to manage and rotate when something goes wrong. A codebase with typed infrastructure declarations and no credentials doesn't have this problem.

These tools are going to read more of your codebase, not less. Context windows keep growing, agents are starting to run commands and call APIs directly, and few teams have asked whether sending repository contents to a model provider counts as third-party data processing under GDPR or HIPAA. For most backend teams, their AI coding tool is an undisclosed data processor. At some point, a regulator will notice, and it's better to have the answer ready than to start looking for one.

Encore is an open-source backend framework where infrastructure is declared in TypeScript or Go and provisioned from the code. GitHub.
